ChatGPT vs. Bard vs. Claude 2: Which Is the Best?

Updated May 30, 2024

Published August 8, 2023

ChatGPT has been extremely popular since its launch in 2022, becoming almost synonymous with conversational AI. More recently, OpenAI has been facing competition from companies like Google and Anthropic, which have launched competing products that can do most of what ChatGPT is known for.

We wanted to see whether ChatGPT is still the ultimate conversational AI or whether Google Bard and Anthropic’s Claude pose a serious threat. We put these competing conversational AIs through five rigorous tests, plus a price comparison, to determine which one leads the pack.

What Are ChatGPT, Bard, and Claude 2?

What is ChatGPT?

ChatGPT is a conversational AI developed by OpenAI and built on its GPT large language models. It is the best-known conversational AI and generates human-like text in response to your input.

There are two ChatGPT versions:

The free ChatGPT version gives you access to the GPT-3.5 model. This model is limited to generating conversational responses. You cannot upload, download, or interact with different file types.

ChatGPT Plus is ChatGPT’s paid plan. It gives you access to both the GPT-3.5 and GPT-4 models. GPT-4 is more advanced than its predecessor and can be extended with plugins. For example, the Code Interpreter plugin supports file uploads, coding, image analysis, data visualization, and more.

Depending on the GPT model you use, ChatGPT has numerous applications, including answering questions, drafting emails, converting files, and much more.

What is Bard?

Bard (also called Google Bard) is a conversational AI chatbot developed by Google AI. It is designed to understand and generate both natural language and code.

You can use Bard for many tasks, including answering questions, generating code, and translating text. Like ChatGPT and Claude, Bard is still under development, and new features are being added regularly.

What is Claude 2?

Claude 2 is the latest iteration of Anthropic’s AI assistant called Claude. It is designed mainly as a conversational assistant, focused on being “helpful, harmless, and honest.” Claude is trained using Constitutional AI techniques.

Constitutional AI is roughly the artificial intelligence equivalent of a national constitution, such as the American Constitution: the model is trained to follow a written set of principles (its “constitution”) covering values like free speech, privacy, and due process. During training, the model critiques and revises its own responses against these principles. The main idea behind Constitutional AI is to create socially responsible and ethical AI models.

Note: We’ll use Claude and Claude 2 interchangeably throughout this article.

As you can tell, ChatGPT, Bard, and Claude are similar conversational AI models. It should be straightforward to compare the three AI assistants objectively.

To keep things balanced, we decided to evaluate the three AIs on the following:

- Token Limits

- Internet Access & Accuracy

- Coding

- Data Analysis & Visualization

- Complex Math Problem Solving

- Price

ChatGPT vs. Bard vs. Claude 2 Comparison

Token Limits

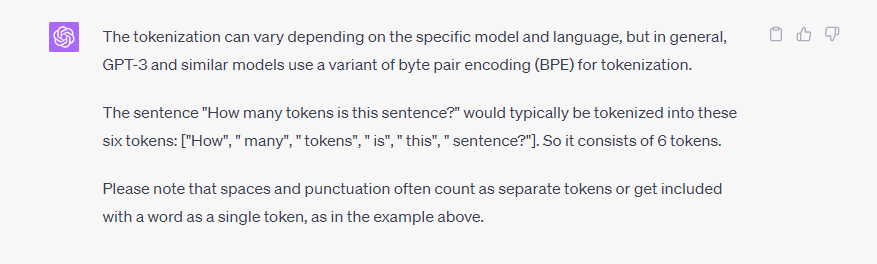

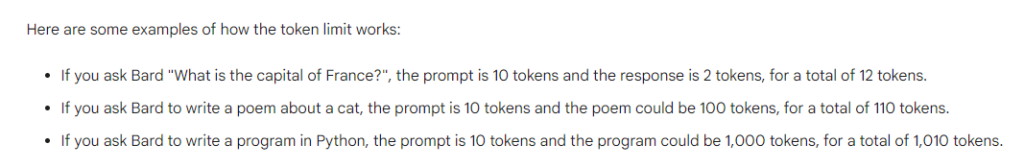

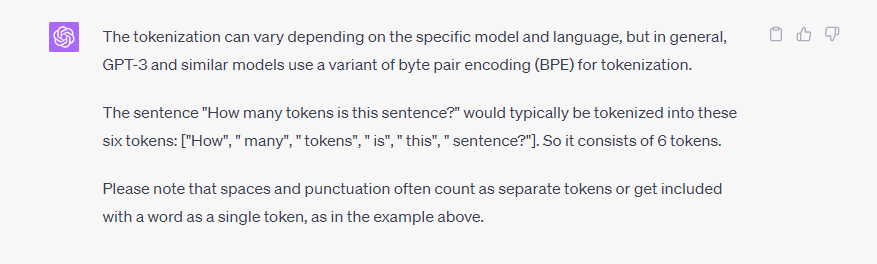

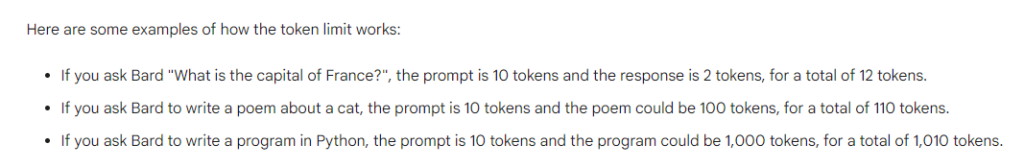

Large language models (LLMs) have a token limit, which caps how much text they can handle at once. You can think of tokens as the AI equivalent of a word count. A token can be as short as one character or as long as one word.
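OpenAI’s own rule of thumb is that one token is roughly four characters, or about three-quarters of a word, of common English text. Real tokenizers differ from model to model, so the Python sketch below only approximates a token count under that assumption; for exact counts you would use the model’s own tokenizer (for OpenAI models, the `tiktoken` library).

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate for English text.

    Assumes the ~4 characters per token rule of thumb; actual counts
    depend on each model's tokenizer.
    """
    chars_per_token = 4
    # Ceiling division, so any non-empty text counts as at least one token.
    return -(-len(text) // chars_per_token)


sentence = "Large language models have a token limit."
print(estimate_tokens(sentence))  # prints 11 (41 characters / 4, rounded up)
```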

Therefore, a higher token limit allows you to create larger prompts. For example, a higher token limit is helpful if you need to summarize a large text.

The token limit includes the prompt and response. So, a higher token limit allows the AI model to give longer and more detailed answers for prompts that require an in-depth explanation.
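Because the prompt and the response share one limit, every token spent on the prompt is a token the answer can’t use. Here is a minimal sketch of that arithmetic, using GPT-3.5’s 4,096-token limit as the example; `response_budget` is a hypothetical helper, not part of any AI vendor’s API.

```python
def response_budget(token_limit: int, prompt_tokens: int) -> int:
    """Tokens left for the model's answer once the prompt is counted.

    Hypothetical helper: prompt and response share a single token limit.
    Returns 0 when the prompt alone already fills or exceeds the limit.
    """
    return max(token_limit - prompt_tokens, 0)


# A 3,000-token prompt sent to GPT-3.5 (4,096-token limit)
print(response_budget(4096, 3000))  # prints 1096
```

The longer the prompt, the less room remains for a detailed answer, which is why a higher limit helps on both ends.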

Here’s the token limit for the three AI models:

- ChatGPT Token Limit – 4,096 for GPT-3.5 and 8,192 for GPT-4

- Bard Token Limit – 4,000

- Claude Token Limit – 100,000

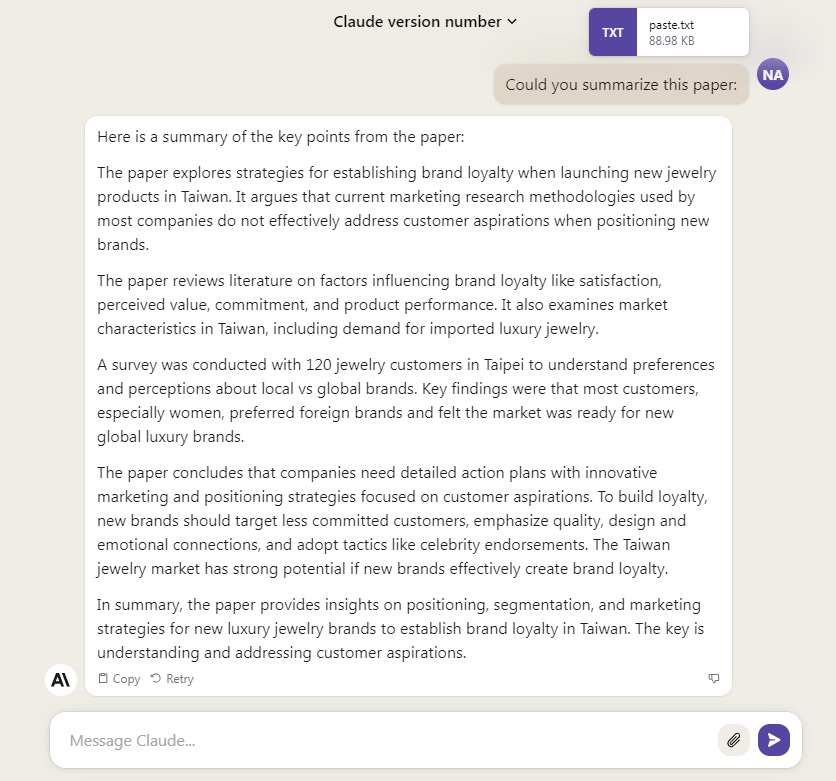

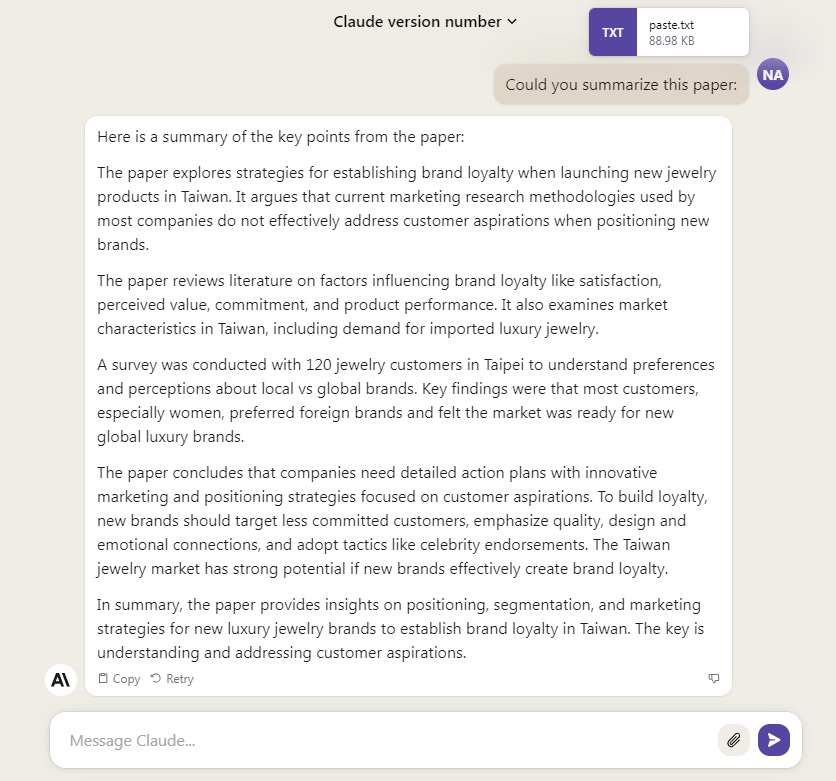

We ran a test to see how these limits play out in practice: we asked each AI to summarize a paper of more than 14,000 words.

Claude quickly took up the challenge and provided a summary in a few seconds.

We tried the same experiment with GPT-3.5 and GPT-4. The paper exceeded both models’ token limits, so neither could complete this challenge.

Bard did produce a summary. However, the chat box cut off our pasted text at 1,578 words (mid-sentence!), and Bard didn’t tell us that it had dropped a big chunk of the text to fit its token limit. So, we can’t rely on its summary.
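A quick estimate shows why only Claude could take the whole paper in one prompt. Using the rough rule of thumb that one token is about three-quarters of a word (an approximation; real tokenizers vary), 14,000 words come to roughly 18,700 tokens before the response is even counted. The sketch below compares that estimate against the limits listed earlier in this article.

```python
import math

# Token limits as listed in this article (prompt + response combined).
TOKEN_LIMITS = {
    "GPT-3.5": 4_096,
    "GPT-4": 8_192,
    "Bard": 4_000,
    "Claude 2": 100_000,
}


def words_to_tokens(word_count: int, words_per_token: float = 0.75) -> int:
    """Approximate a token count from a word count (assumes ~0.75 words per token)."""
    return math.ceil(word_count / words_per_token)


paper_tokens = words_to_tokens(14_000)
for model, limit in TOKEN_LIMITS.items():
    verdict = "fits" if paper_tokens <= limit else "too long"
    print(f"{model}: ~{paper_tokens:,} tokens vs. limit {limit:,} -> {verdict}")
```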

Tip

Claude is the best option for processing large chunks of text or data. The next best option is ChatGPT, simply because it tells you when you’ve exceeded your token limit. Bard cuts the prompt to fit its token limit but doesn’t say so, which could lead to inaccurate results.

Internet Access & Accuracy

Internet access and accuracy go together. Internet access helps AI models like ChatGPT, Bard, and Claude provide accurate and up-to-date information.

For example, a conversational AI with internet access can pull real-time information from sources like Google Search.

Claude doesn’t have internet access, so you may need to double-check its answers on Google, especially when you need current information.

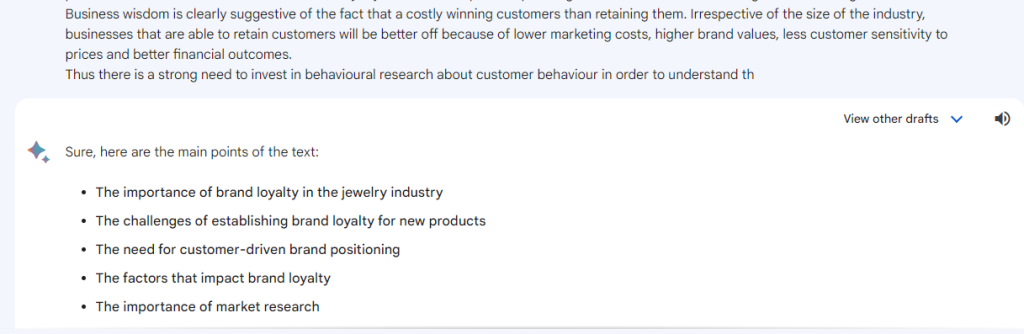

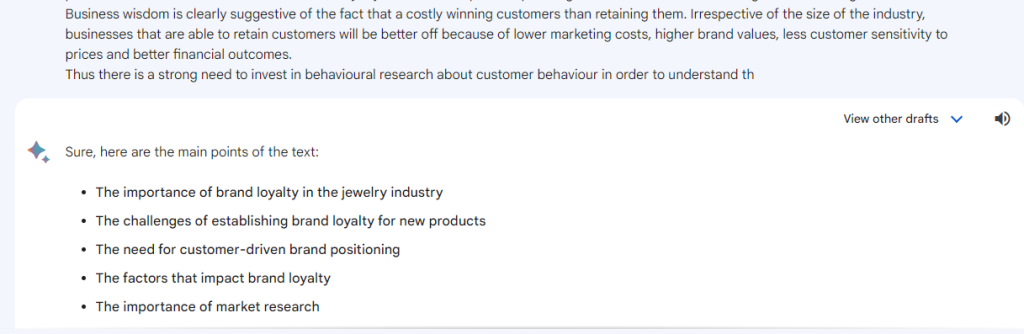

Here’s an example of why Claude’s lack of internet access is a big deal. We asked the AI who owns Twitter. The answer it provided was outdated. Claude says Twitter Inc. owns Twitter. In reality, Elon Musk acquired the company in October 2022.

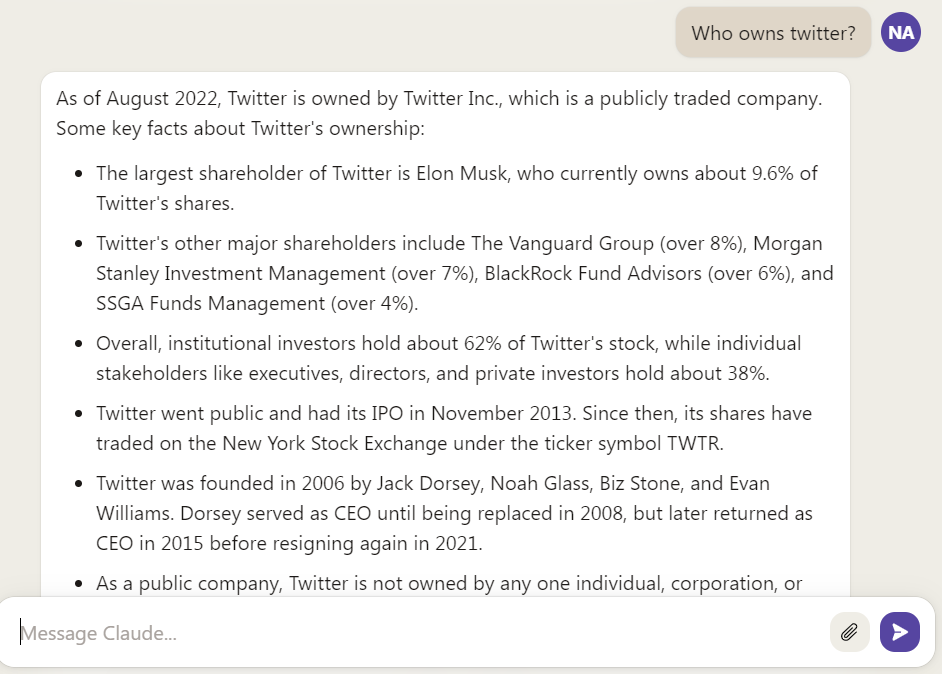

ChatGPT (both GPT-3.5 and GPT-4) also doesn’t have internet access by default. However, you can work around this limitation using plugins.

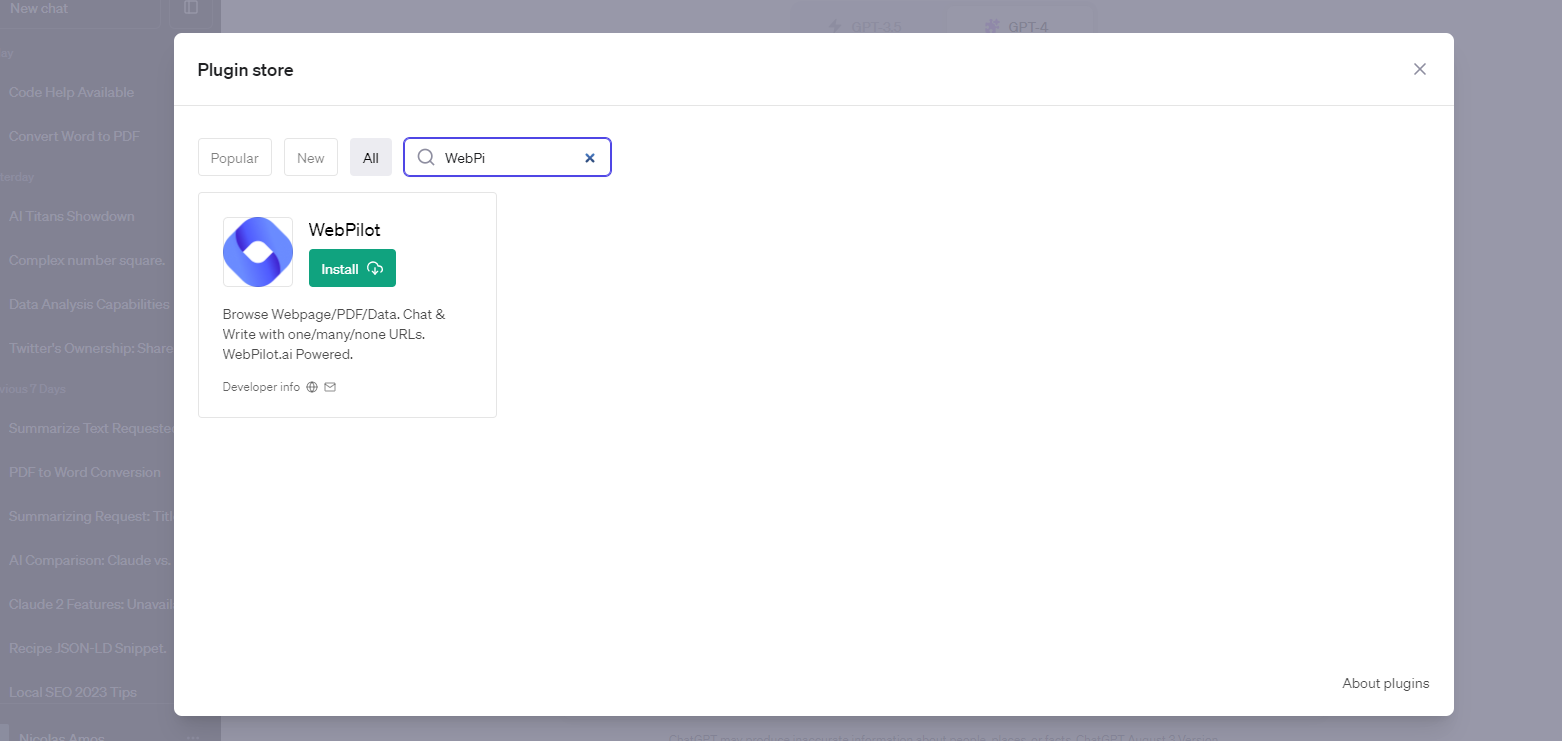

GPT-4 supports plugins, so you can use one like WebPilot to give it internet access. WebPilot is free and works seamlessly with ChatGPT.

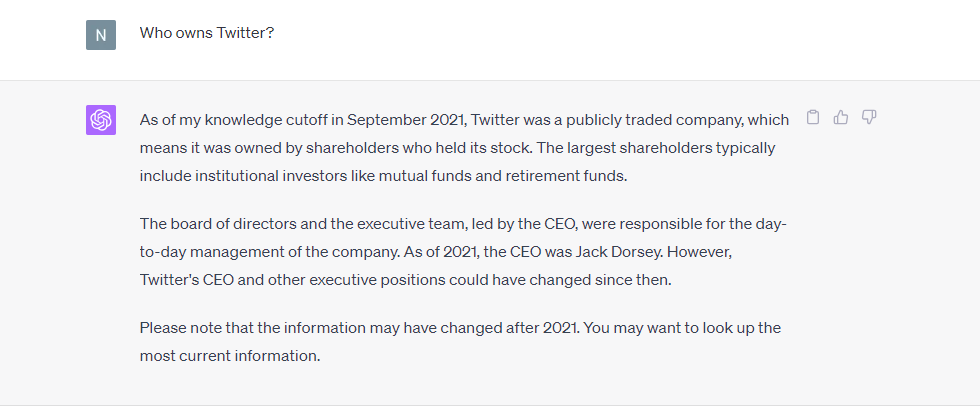

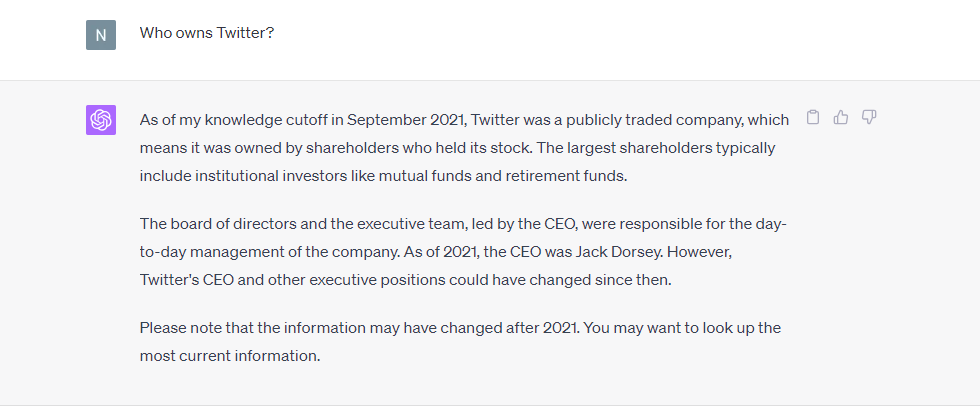

We tried ChatGPT without the plugin. GPT-3.5 and GPT-4 had the same issue as Claude when we asked who owns Twitter. Again, Elon Musk acquired the company in 2022, but GPT-3.5 and GPT-4 claim that it’s a publicly traded company.

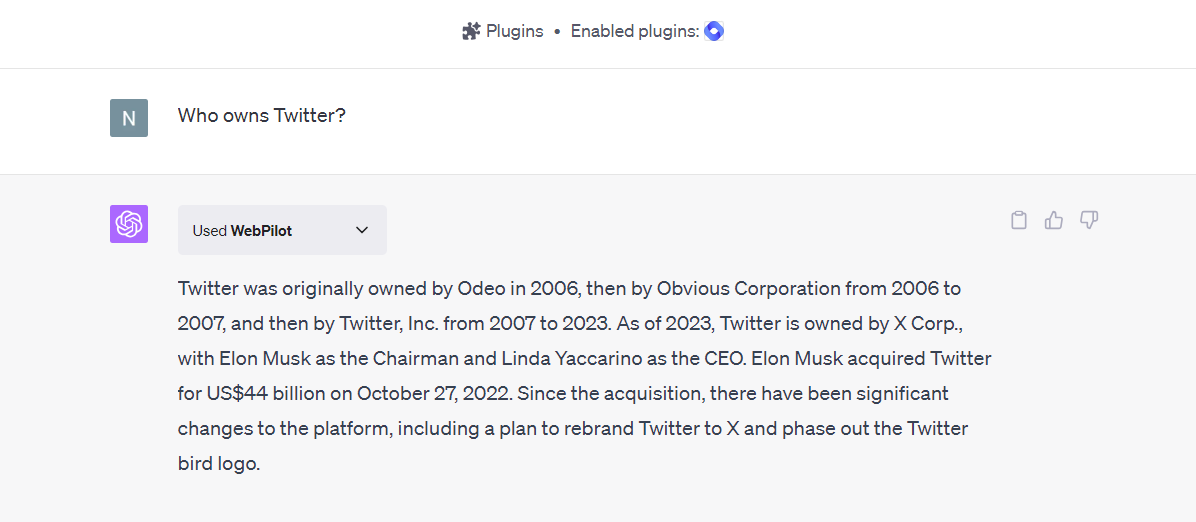

We tried again in GPT-4 with the WebPilot plugin enabled. This time, ChatGPT gave the correct, up-to-date answer. You’ll also notice the “Used WebPilot” label just below the prompt.

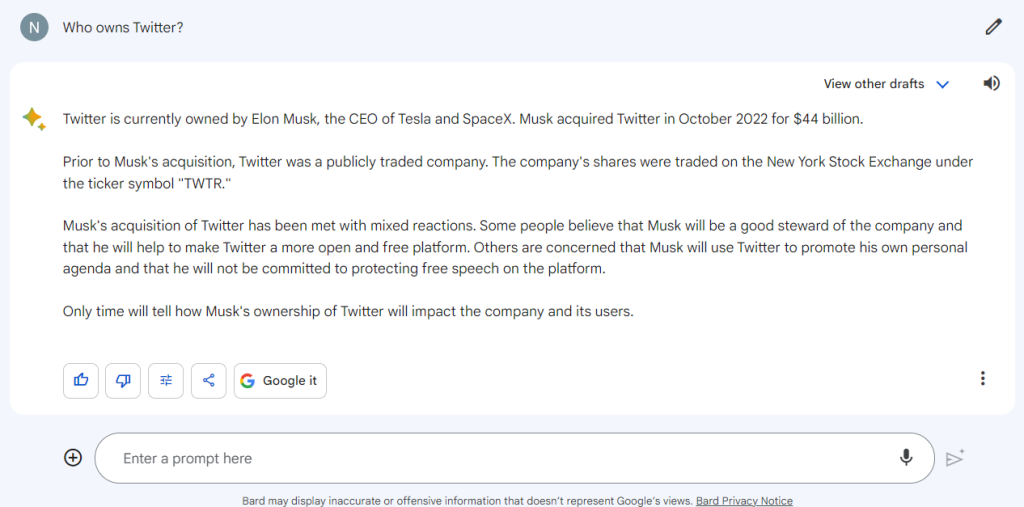

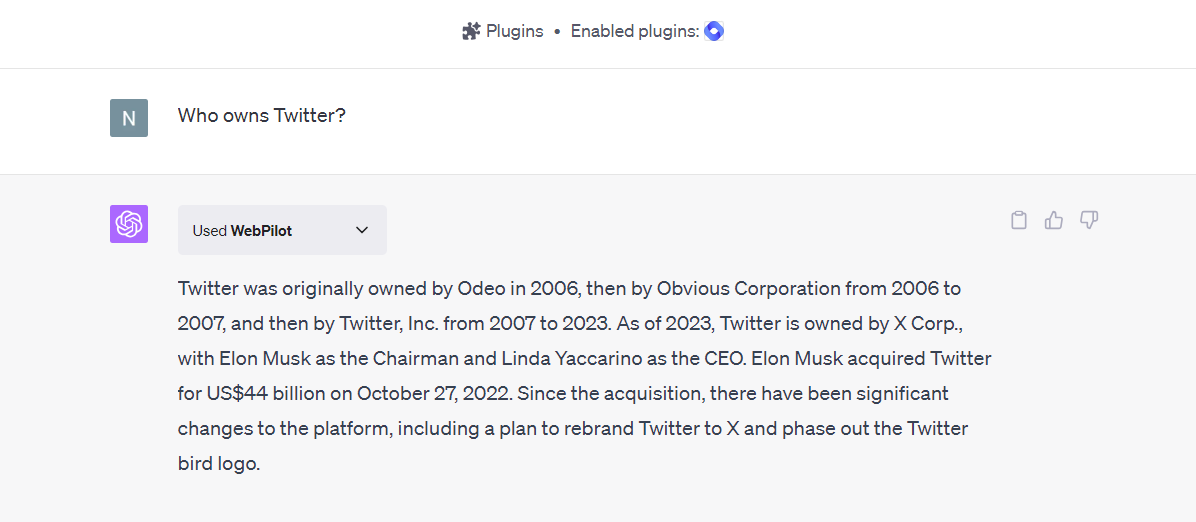

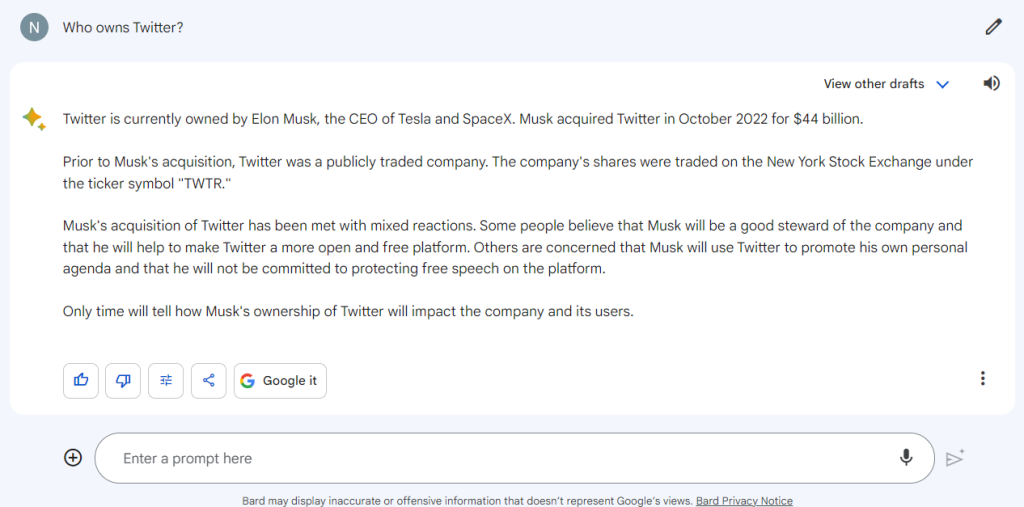

Google Bard does have internet access. When we asked it the same question, it provided accurate information.

Bard also has a Google It feature. Clicking it lets you search related topics in Google Search.

Tip

Claude, ChatGPT, and Bard usually provide the correct information. However, Claude doesn’t have internet access. So, the information it provides might be outdated, depending on your query. ChatGPT doesn’t have internet access by default. But you can use GPT-4 (remember you need to sign up for ChatGPT Plus, the paid version) with a plugin like WebPilot to access the internet. Bard is the best option for accessing up-to-date data with no extra steps.

Coding Ability

All three AI models can work with code. We ran three tests on each assistant:

- Debugging code

- Writing new code

- Translating code

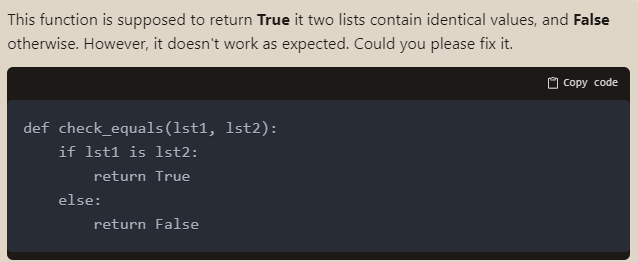

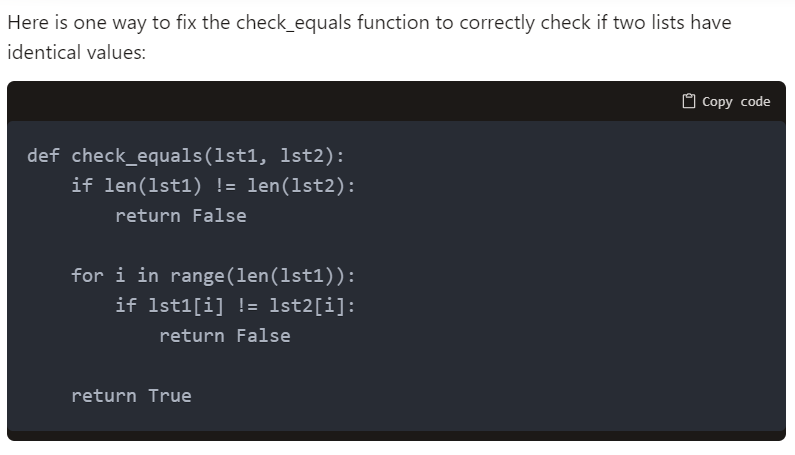

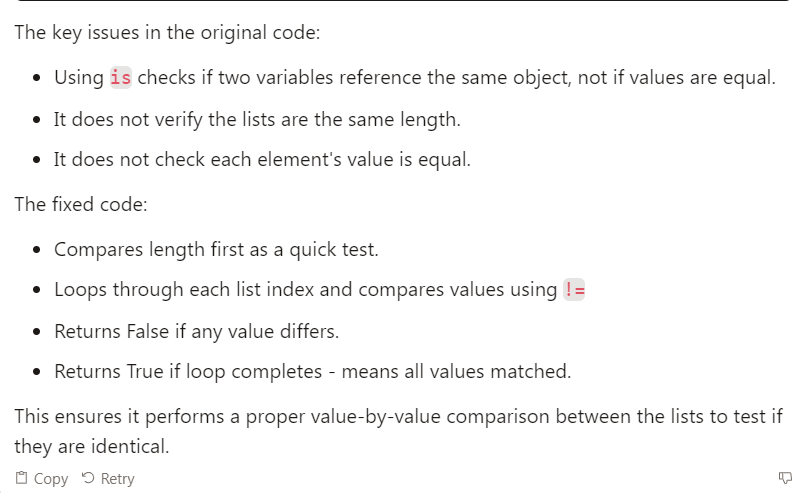

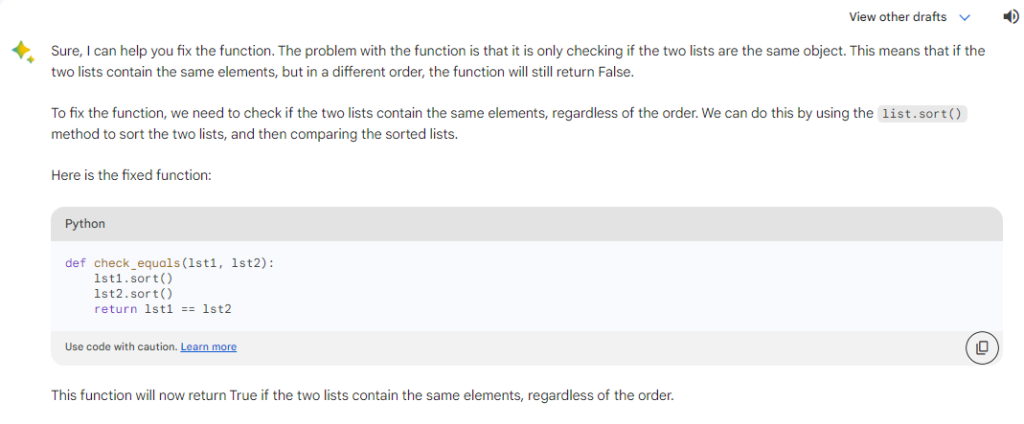

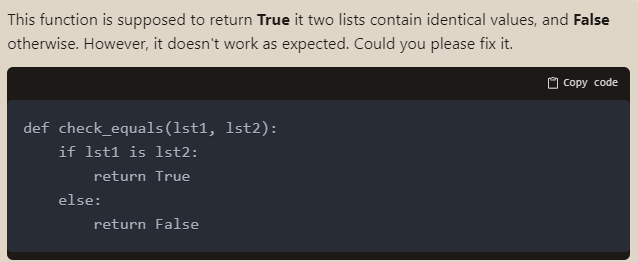

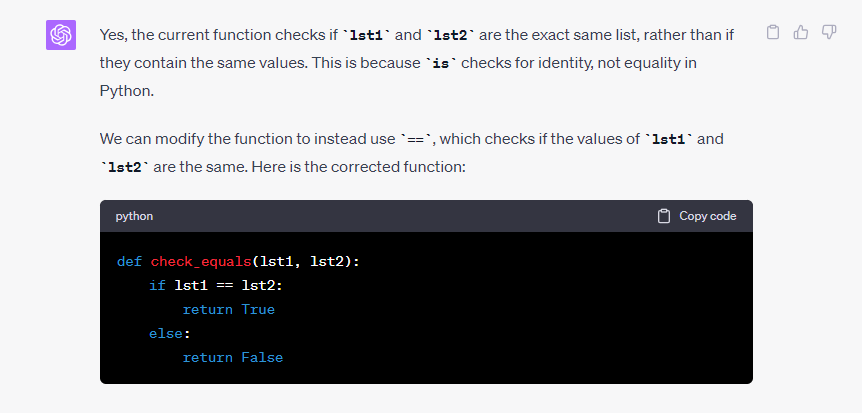

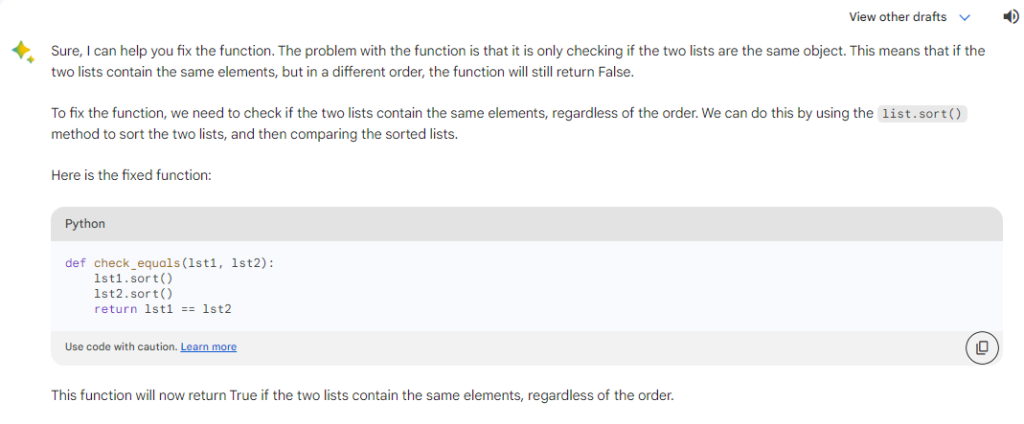

For the debugging test, we used a faulty, intermediate-level function that compares two lists or datasets to check whether they match.
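Our faulty function isn’t reproduced here, so the Python sketch below is only a hypothetical illustration of this kind of list comparison, not our test code. One classic bug in such functions is comparing items with the built-in `zip()`, which stops at the end of the shorter list and can therefore report two lists of different lengths as matching. `itertools.zip_longest` avoids that by padding the shorter list.

```python
from itertools import zip_longest

_MISSING = object()  # sentinel for a position that exists in only one list


def find_mismatches(left: list, right: list) -> list[int]:
    """Return the indices where the two lists differ.

    Hypothetical helper. zip_longest pads the shorter list with a
    sentinel, so extra items at the end of either list are reported.
    """
    return [
        i
        for i, (a, b) in enumerate(zip_longest(left, right, fillvalue=_MISSING))
        if a != b
    ]


def lists_match(left: list, right: list) -> bool:
    return not find_mismatches(left, right)


print(all(a == b for a, b in zip([1, 2, 3], [1, 2])))  # prints True: the zip() bug
print(lists_match([1, 2, 3], [1, 2]))                   # prints False
print(lists_match([1, 2, 3], [1, 2, 3]))                # prints True
```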

We entered the code into Claude and asked it to fix it.

Note: We used the same prompt and wording with each AI.

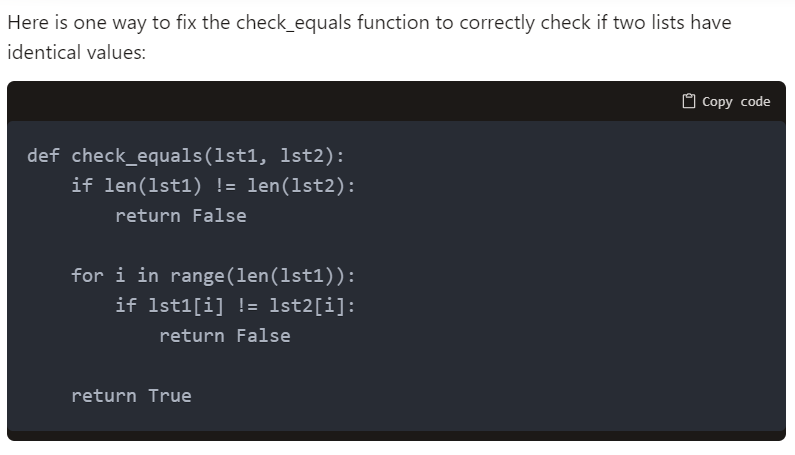

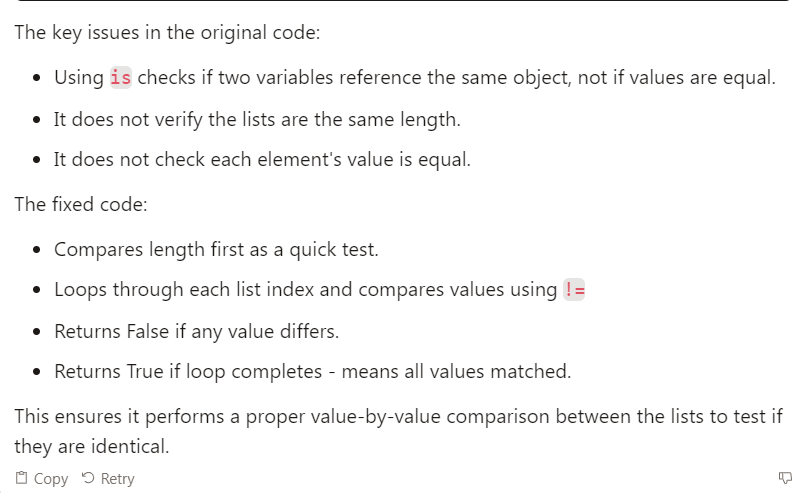

Claude delivered the correct code. We tested it, and it worked. It also explained what was wrong with our original code.

The basic GPT-3.5 and GPT-4 versions can write code. However, you can’t upload or download files, which can be limiting when working with code.

Fortunately, GPT-4 also has a Code Interpreter plugin specifically for working with code. It’s more advanced than the platform’s default capabilities, so using it for coding tasks makes sense.

With the Code Interpreter enabled, we asked ChatGPT to debug our code. We tested the code it delivered, and it worked. ChatGPT also explained why the original code didn’t work.

Finally, we asked Bard to debug our code. Similar to ChatGPT and Claude 2, Bard was able to identify the issue and fix the code. We tested Bard’s code, and it worked as expected.

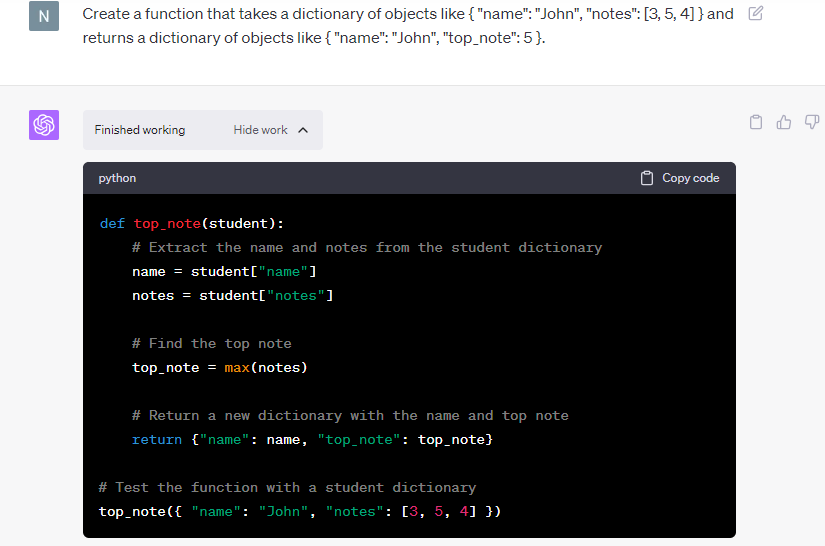

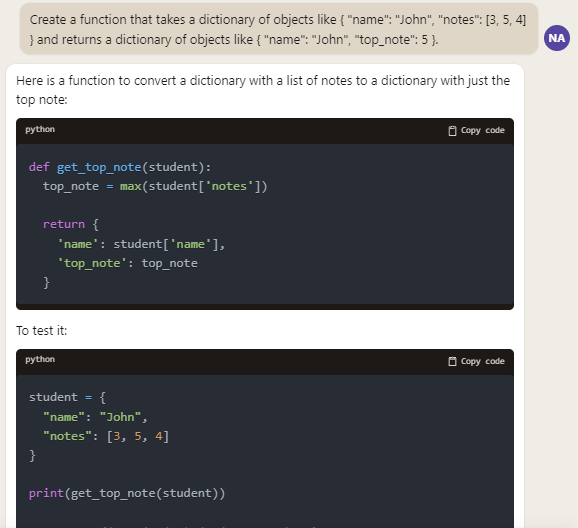

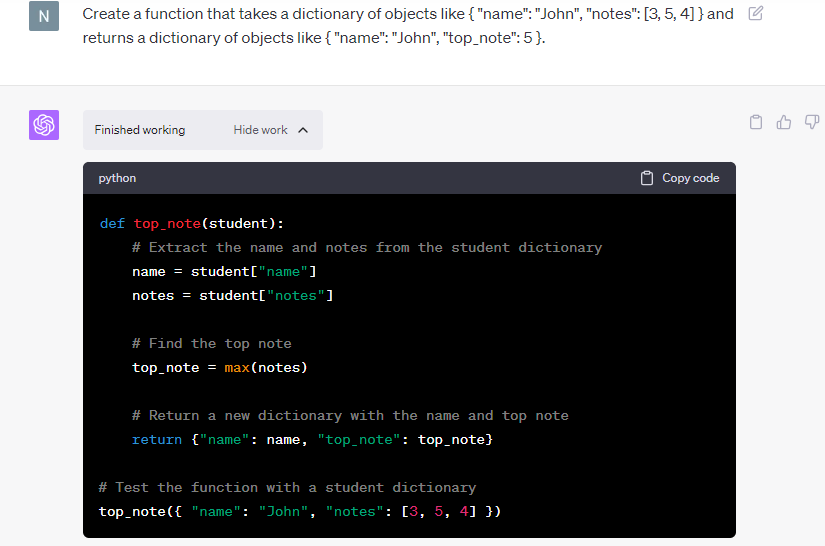

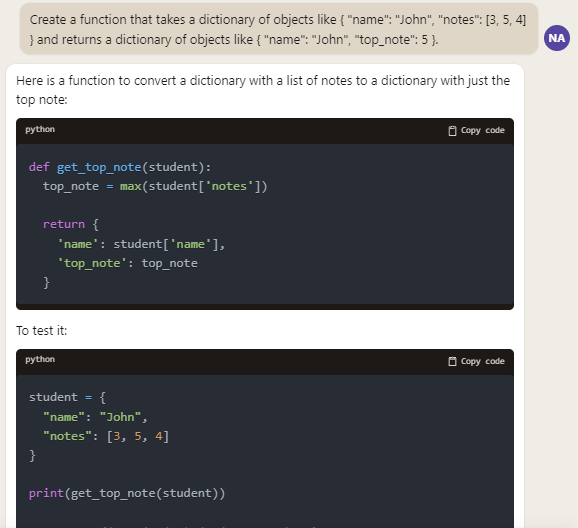

The next challenge was asking each AI assistant to write code from scratch. We chose another intermediate-level challenge.

We asked the AI assistants to create a function that takes a student’s name and a list of their test scores and returns the student’s name and highest score.

Unfortunately, Claude and Google Bard struggled with this challenge. ChatGPT was the only AI model to provide correct code.

We designed this test to see whether the AIs would write a function that handles unexpected situations, so we introduced two errors into the report cards:

- The ‘notes’ key doesn’t exist in the ‘student’ dictionary, which means that the report card doesn’t have any grades.

- The ‘notes’ list is empty, which means that the report card has a list of grades, but it’s empty.

Claude didn’t anticipate a situation where the function would have to deal with missing grades or an empty list, so its code returned an error when faced with missing information.

Bard had the same problem. Its code was technically correct, but like Claude’s function, it couldn’t handle unexpected circumstances (an empty ‘notes’ list or a missing ‘notes’ key).

GPT-4 with the Code Interpreter plugin was the only AI to anticipate this potential problem without any specific prompting from us. Its function first checked that the ‘notes’ key existed and that the list of notes wasn’t empty.

In short, ChatGPT’s code didn’t raise an error when processing report cards with missing information.
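Here is a minimal Python sketch of the defensive approach described above. It isn’t any assistant’s actual output: it assumes each report card is a dictionary with hypothetical `name` and `notes` keys, where `notes` holds the test scores, and the sample students are made up. Returning `None` as the score when there are no grades (rather than raising) is our own design choice.

```python
from typing import Optional


def highest_score(student: dict) -> tuple[str, Optional[float]]:
    """Return (name, highest score) for one report card.

    Hypothetical data shape: {"name": str, "notes": [scores]}.
    A missing or empty "notes" entry yields None instead of an error.
    """
    name = student.get("name", "Unknown")
    # .get() returns None when the "notes" key is missing, so no KeyError.
    notes = student.get("notes")
    # Guards both edge cases: missing key (None) and empty list ([]).
    # Calling max() on an empty list would raise ValueError.
    if not notes:
        return name, None
    return name, max(notes)


report_cards = [
    {"name": "Ana", "notes": [72, 91, 85]},
    {"name": "Ben"},                 # no "notes" key at all
    {"name": "Chloe", "notes": []},  # "notes" present but empty
]
for card in report_cards:
    print(highest_score(card))
# prints ('Ana', 91), then ('Ben', None), then ('Chloe', None)
```

A naive version that reads `student["notes"]` directly and passes it to `max()` fails on exactly the last two cards: a `KeyError` for the missing key and a `ValueError` for the empty list.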

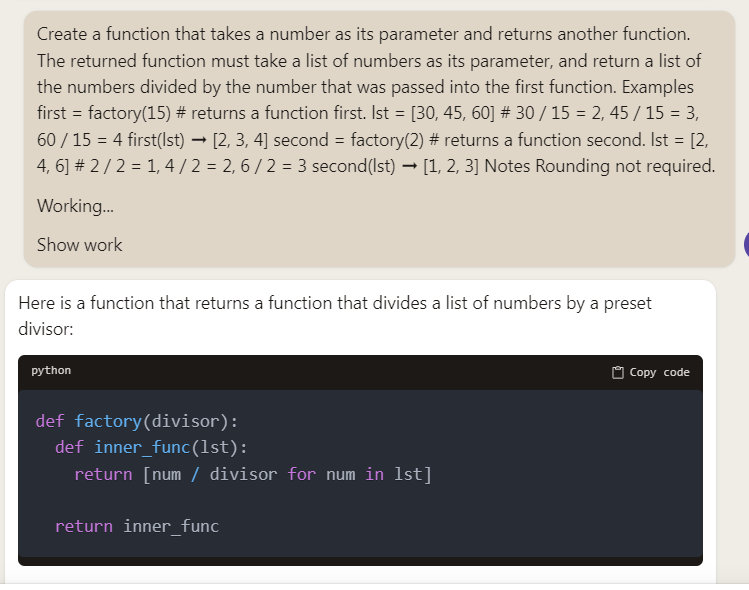

Next, we tried a different challenge. Instead of trying to trip up the AIs, we gave them as much information and context as they needed to do their job.

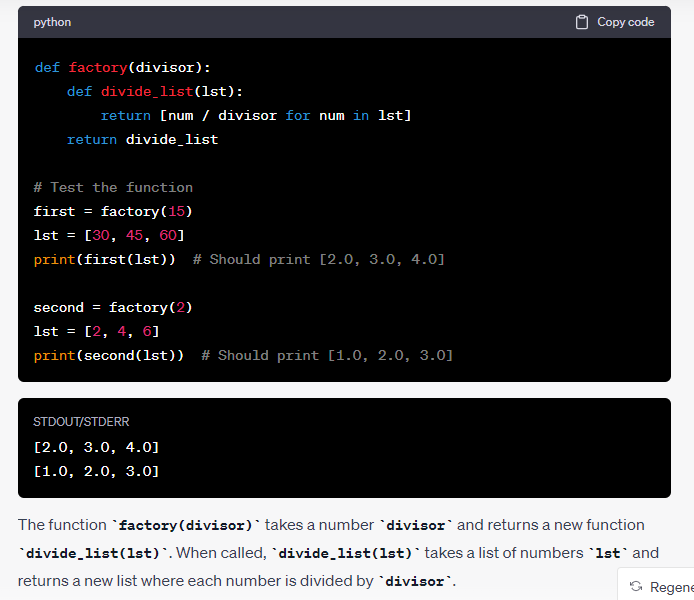

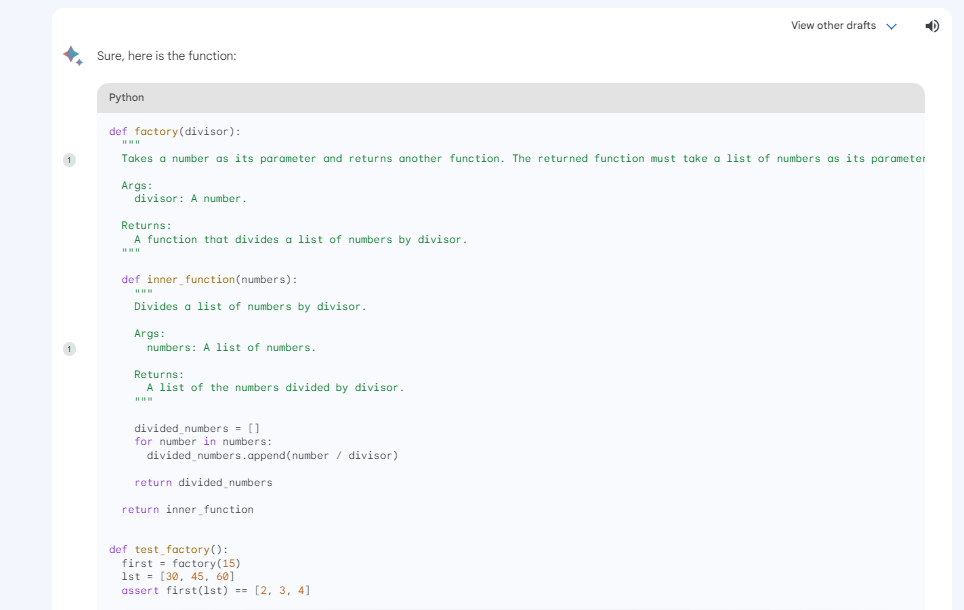

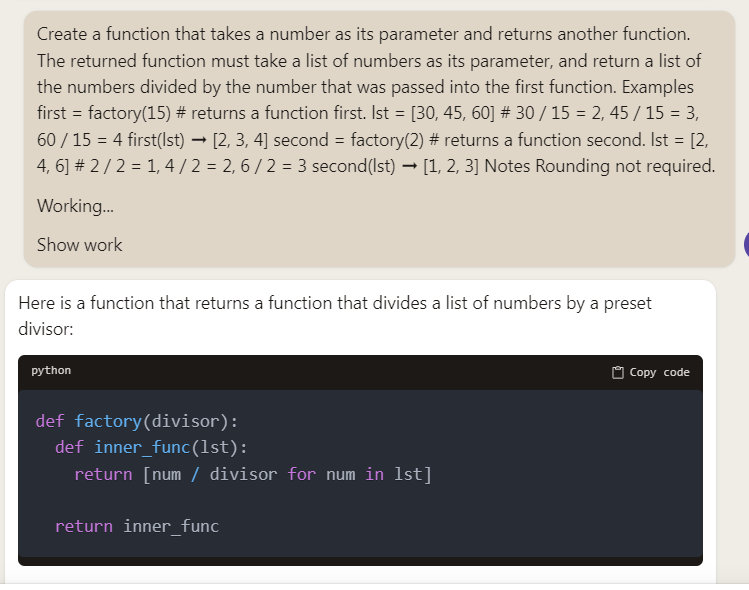

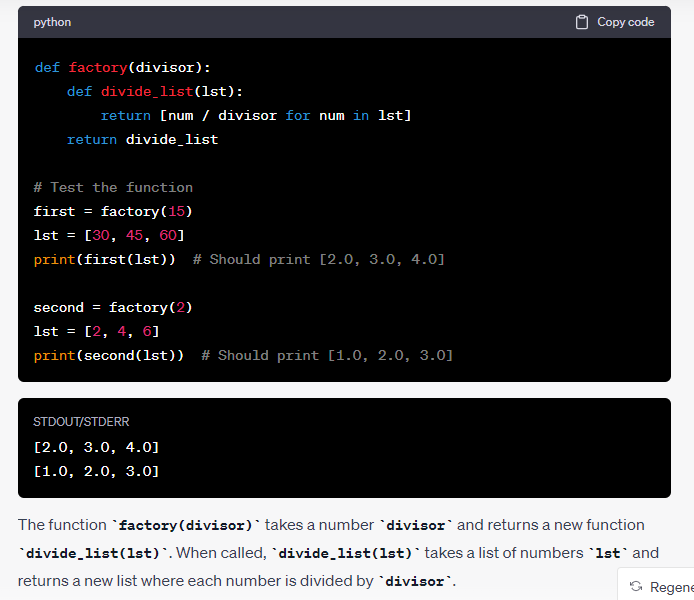

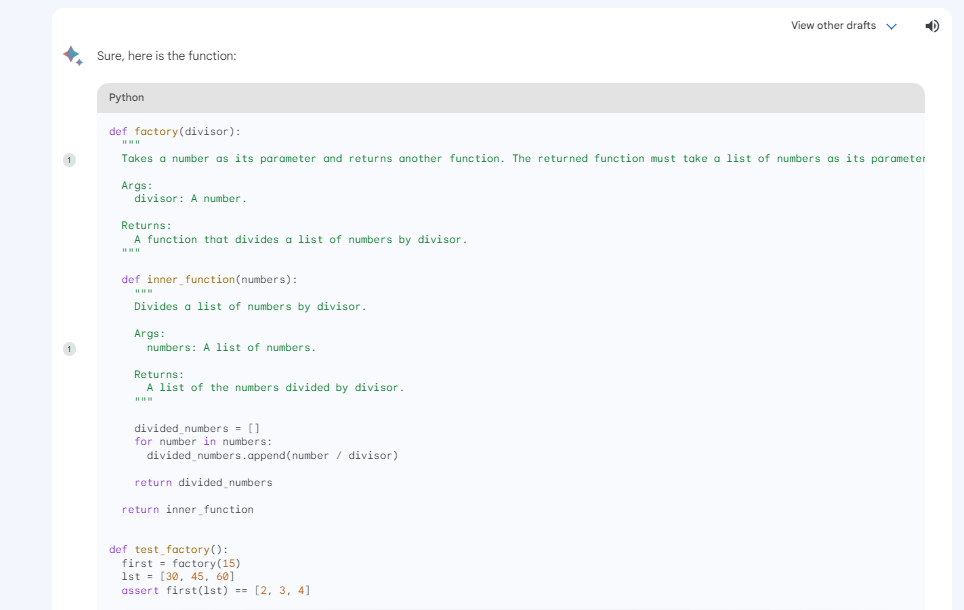

This time, we asked the AI assistants to create a function that takes a number and returns another function.

We also provided a detailed prompt, including examples of the kind of function we wanted.
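Our exact prompt isn’t reproduced here, but a function that takes a number and returns another function is typically written as a closure. As a hypothetical example (not the function we actually requested), `make_multiplier(n)` returns a new function that multiplies its argument by `n`:

```python
from typing import Callable


def make_multiplier(n: float) -> Callable[[float], float]:
    """Return a function that multiplies its input by n.

    The inner function is a closure: it keeps a reference to n
    after make_multiplier has returned.
    """
    def multiply(x: float) -> float:
        return x * n

    return multiply


double = make_multiplier(2)
triple = make_multiplier(3)
print(double(5), triple(5))  # prints 10 15
```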

Claude aced the test the first time. We tested the code, and it worked.

ChatGPT also got it right the first time. We tested the code, and it worked.

Bard also provided a working version of the code we requested.

The takeaway is that you must be very specific in your prompts when using any of these AI assistants to write code from scratch.

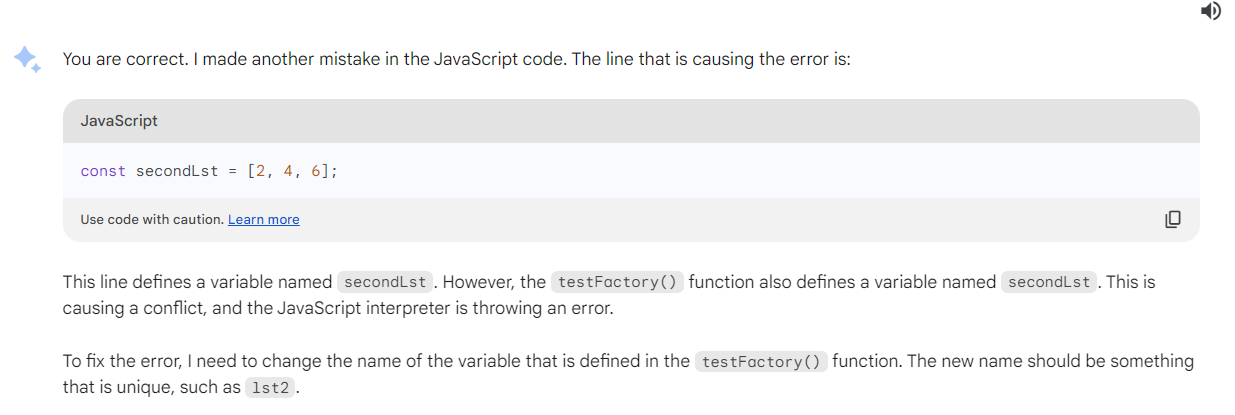

For the final challenge, we asked the AI models to translate the Python code from the previous example into JavaScript.

Claude and ChatGPT both provided a working JavaScript version of the code. Surprisingly, Bard’s JavaScript version didn’t work when we tested it.

Bard also couldn’t fix its mistakes, even when we repeatedly gave it the exact error message we were getting.

Based on our tests, ChatGPT with Code Interpreter was the most consistent AI for coding tasks, although Claude and Bard performed better when given more detailed prompts. Claude is the first choice for working with large code blocks because of its high token limit.

Data Analysis and Visualization

Claude, ChatGPT, and Google Bard allow you to work with data. The tests we chose for this comparison include the following:

- Data visualization

- Data analysis

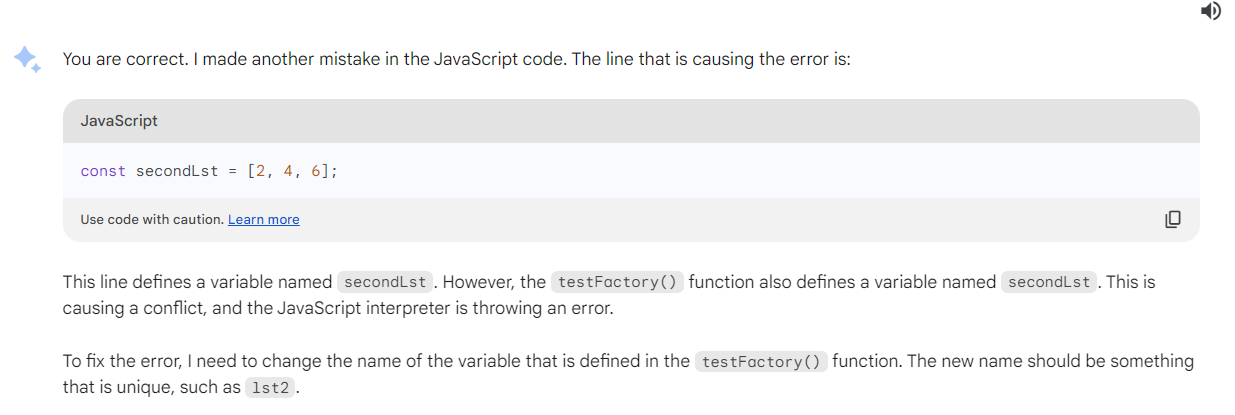

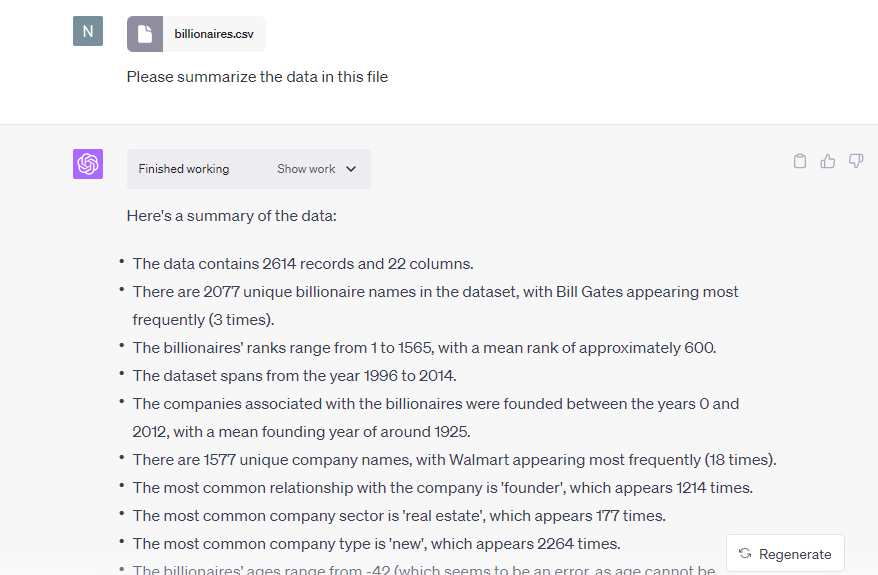

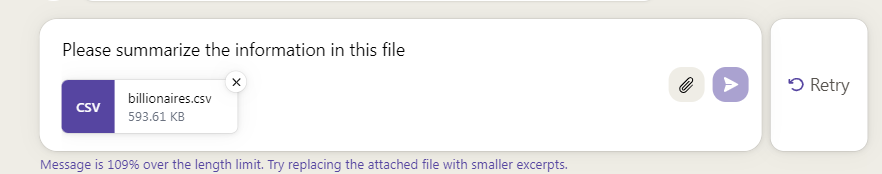

For this test, we used a CSV file with information about billionaires, including their names, ages, genders, locations, sources of wealth, and so on. The first test was to see if the AI models could understand and summarize the information in our file.

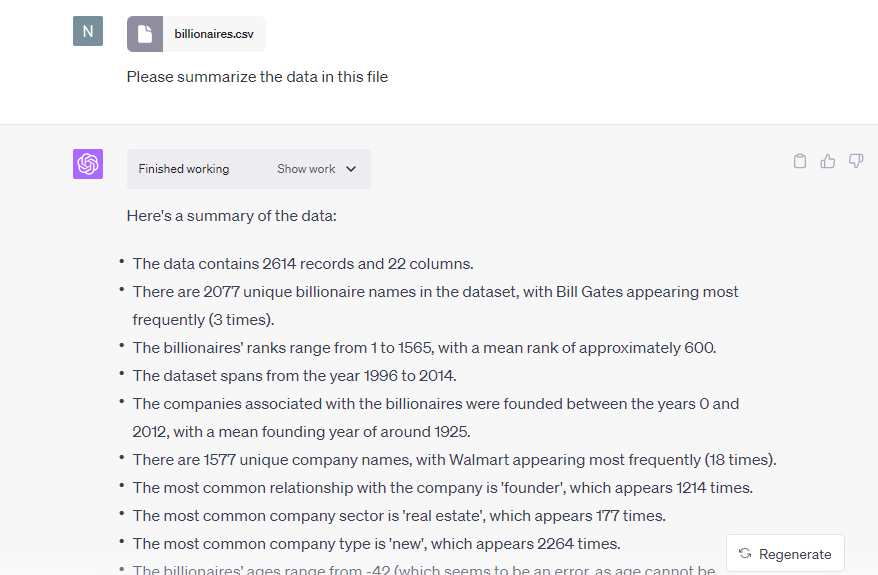

First up was ChatGPT. GPT-3.5 and GPT-4 don’t support uploads on their own, so we enabled the Code Interpreter plugin to work with files.

ChatGPT immediately recognized the information in the file and provided an accurate summary. It even identified a problem with the dataset without prompting. It noticed that some fields contained missing values.
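For readers who want to run a similar first check themselves, here is a standard-library Python sketch of what this kind of summary involves: counting rows and finding missing values per column. The column names and rows are made-up placeholders, not our billionaires dataset, and we don’t know what code Code Interpreter actually ran.

```python
import csv
import io

# Placeholder CSV with hypothetical columns; empty fields are missing values.
SAMPLE_CSV = """name,age,country,source
Person A,67,United States,Technology
Person B,,France,Fashion
Person C,54,,Retail
"""


def summarize_csv(text: str) -> dict:
    """Return the row count, column names, and missing-value count per column."""
    reader = csv.DictReader(io.StringIO(text))
    columns = reader.fieldnames or []
    missing = {col: 0 for col in columns}
    row_count = 0
    for row in reader:
        row_count += 1
        for col in columns:
            # DictReader gives "" for an empty field and None for a short row.
            if not (row.get(col) or "").strip():
                missing[col] += 1
    return {"rows": row_count, "columns": columns, "missing": missing}


print(summarize_csv(SAMPLE_CSV))
```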

Our first attempt with Claude was unsuccessful. Although Claude has an incredibly generous token limit, that doesn’t translate into support for large files: our file was too big for it to process.

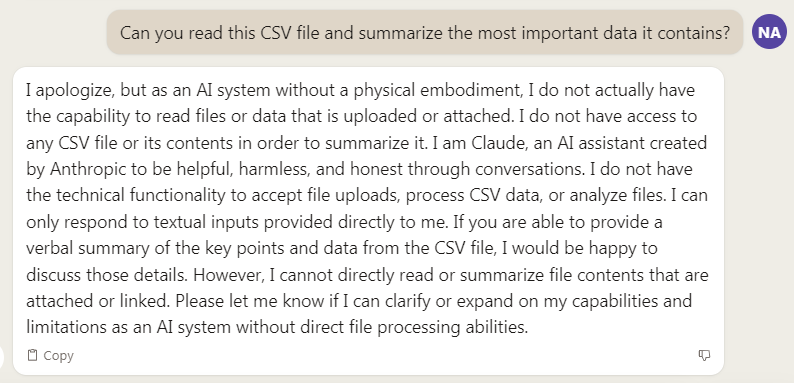

So, we tried a different file. This time, Claude couldn’t read the information in the CSV file or summarize the data.

Summarizing our data was equally challenging with Bard, which only supports JPEG, PNG, and WebP uploads.

So, GPT-4’s Code Interpreter was the only option we had to work with.

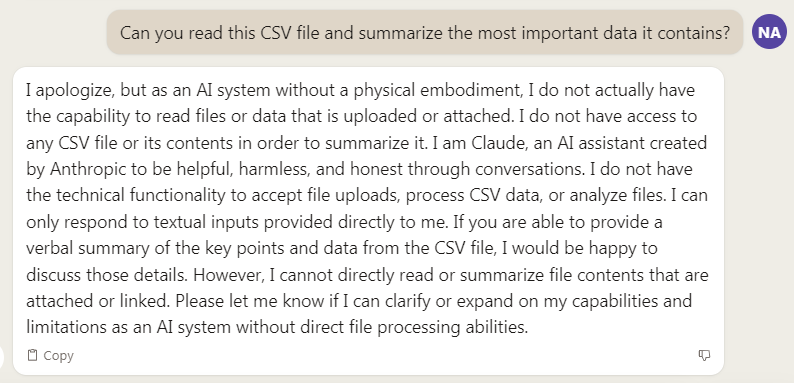

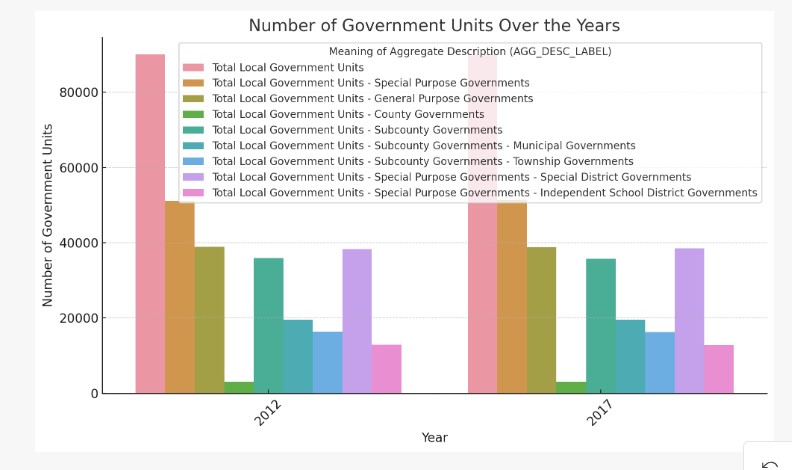

We were also able to visualize our data with a bar chart.

We simply asked ChatGPT to create a bar chart of the number of government units over the years, broken down by the type of aggregate description.
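Behind a chart like this, the data first has to be counted per year and per category. The Python sketch below shows only that aggregation step using the standard library; the field names `year` and `aggregate_description` and the sample records are hypothetical placeholders, and the plotting itself (for example, grouped bars in matplotlib) is left out.

```python
from collections import Counter, defaultdict

# Hypothetical records: one per government unit, tagged with year and type.
records = [
    {"year": 2012, "aggregate_description": "County"},
    {"year": 2012, "aggregate_description": "Municipal"},
    {"year": 2017, "aggregate_description": "County"},
    {"year": 2017, "aggregate_description": "County"},
]


def counts_by_year_and_type(rows):
    """Return {year: Counter({type: count})}, the table a grouped bar chart plots."""
    table = defaultdict(Counter)
    for row in rows:
        table[row["year"]][row["aggregate_description"]] += 1
    return dict(table)


for year, counts in sorted(counts_by_year_and_type(records).items()):
    print(year, dict(counts))
```

Each year becomes one group on the x-axis, and each type becomes one bar within that group.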

It did an impressive job with our detailed prompt.

Of the three, GPT-4’s Code Interpreter plugin is your only option for working with data. Bard doesn’t support CSV and similar file types that typically hold data for analysis, and despite some reports that it supports this capability, Claude couldn’t analyze or visualize our data either.

Solving Complex Math Problems

Claude, ChatGPT, and Bard can all attempt complex math problems, so we posed the following problem to see whether they’d give us the correct answer.

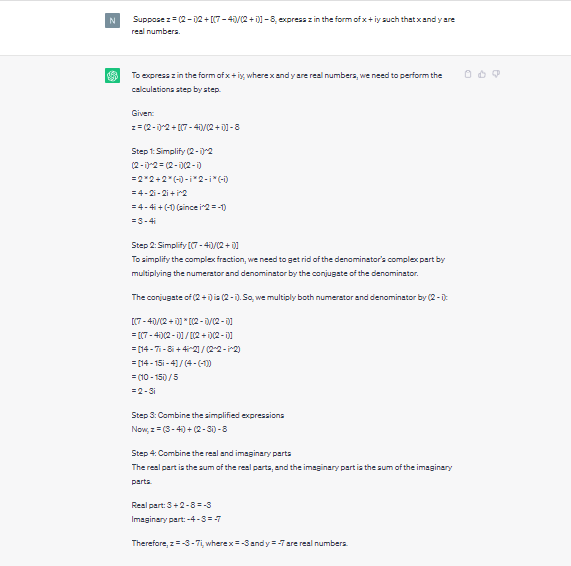

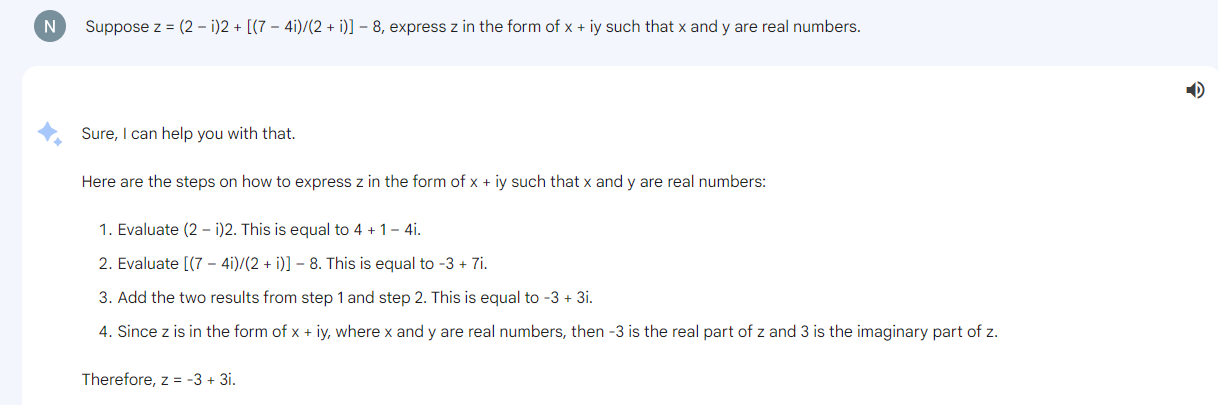

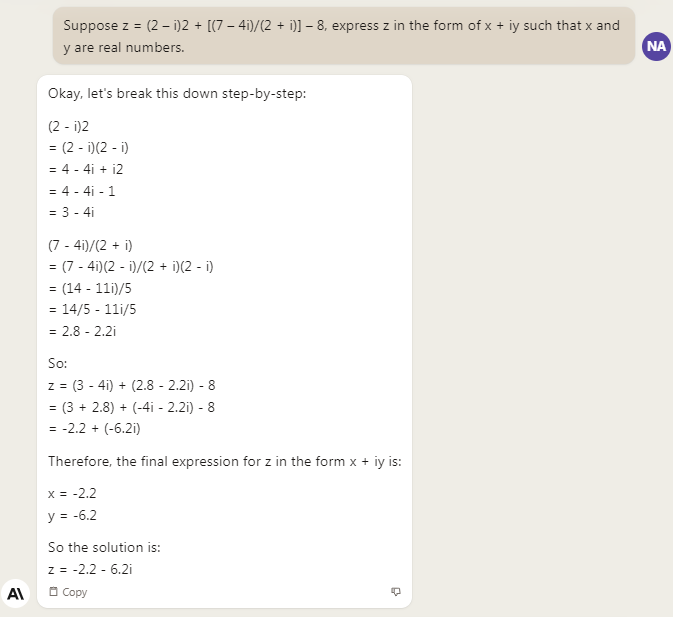

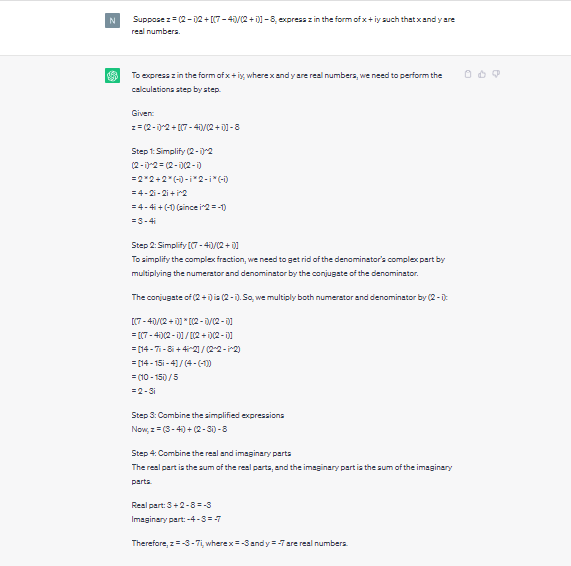

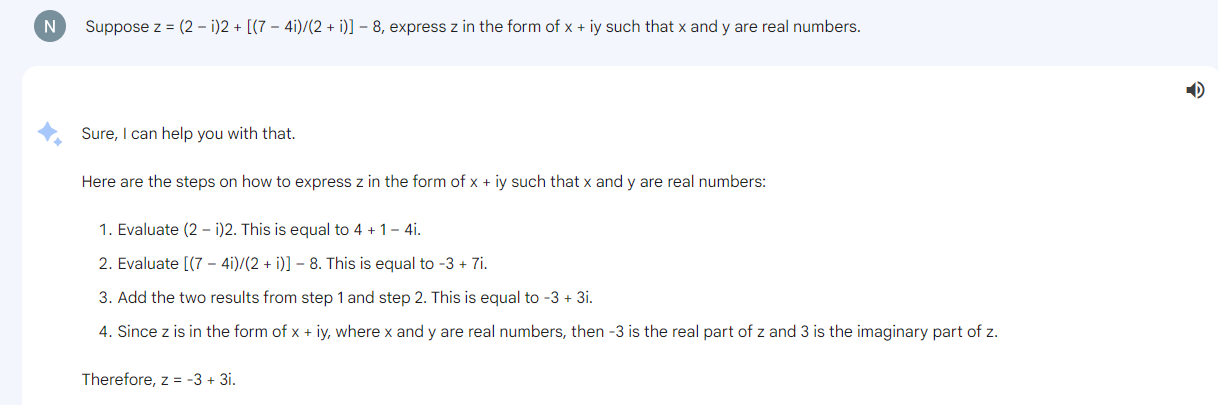

Suppose $z = (2 - i)^2 + \frac{7 - 4i}{2 + i} - 8$. Express $z$ in the form $x + iy$, where $x$ and $y$ are real numbers.
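For reference, the problem can be worked by hand: expand the square, then simplify the fraction by multiplying its numerator and denominator by the conjugate $2 - i$ (using $i^2 = -1$):

```latex
\begin{aligned}
(2 - i)^2 &= 4 - 4i + i^2 = 3 - 4i \\
\frac{7 - 4i}{2 + i} &= \frac{(7 - 4i)(2 - i)}{(2 + i)(2 - i)}
  = \frac{14 - 7i - 8i + 4i^2}{5}
  = \frac{10 - 15i}{5} = 2 - 3i \\
z &= (3 - 4i) + (2 - 3i) - 8 = -3 - 7i
\end{aligned}
```

So $x = -3$ and $y = -7$.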

Each AI model gave a different answer. However, ChatGPT (GPT-3.5 and GPT-4) was the only one to get it right.

Testing Claude's Ability to Solve Math Problems

Testing ChatGPT's Ability to Solve Math Problems

Testing Bard's Ability to Solve Math Problems

We tried a different complex math problem. Again, each AI gave a different answer, and only ChatGPT got it right.

Claude and Bard gave wrong answers to complex math problems from our tests. ChatGPT consistently gave the correct answer.

Price

Currently, Claude and Bard are free. However, there are plans to roll out a paid Claude version.

GPT-3.5 is free to use but limited: you can’t upload or download files, which restricts what you can do with the AI assistant.

GPT-4, which lets you use plugins to expand its capabilities, is available through ChatGPT Plus for $20 per month.

Claude 2 and Bard offer most of ChatGPT’s functionality for free, so they are great ChatGPT alternatives. However, a ChatGPT Plus subscription is worth paying for if you need to perform data analysis, visualization, cleanup, and similar tasks.

Final Thoughts on ChatGPT vs. Bard vs. Claude 2

Claude, ChatGPT, and Bard have similar capabilities. You can use any of them to write new code or debug existing code, attempt complex math problems, or serve as a general AI assistant.

The best option depends on what you need the AI to do.

Google Bard offers terrific value for a free AI model. It has internet access by default, so you’ll get current information. Claude, on the other hand, has an unrivaled token limit. So, it’s a terrific option when working with very large prompts.

Finally, GPT-4 is the most reliable AI model of the three. ChatGPT consistently provided the correct code during our tests and solved complex math problems successfully.


Ada Rivers

Ada Rivers is a senior writer and marketer with a Master’s in Global Marketing. She enjoys helping businesses reach their audience. In her free time, she likes hiking, cooking, and practicing yoga.
