BerriAI

Open-source tool for calling LLM APIs, including OpenAI models, through the OpenAI format

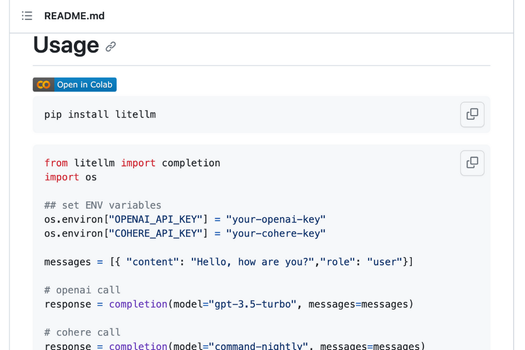

Calls various LLM APIs using the OpenAI format.

Offers completion, embedding, and moderation functions.

Integrates with many model providers and with frameworks such as LangChain and LlamaIndex.

What is BerriAI

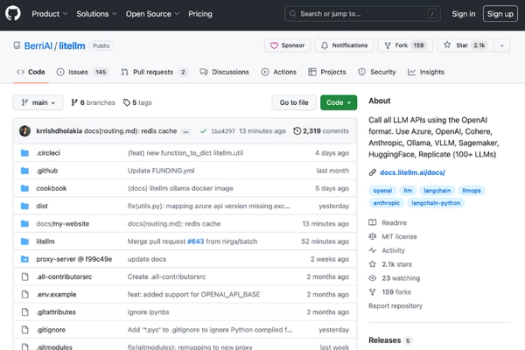

BerriAI is the GitHub organization behind LiteLLM, an open-source Python library and proxy server. LiteLLM lets users call models from many providers, including Bedrock, Azure, OpenAI, Cohere, Anthropic, Ollama, SageMaker, Hugging Face, and Replicate, using the OpenAI format. It offers streaming, a 1-click OpenAI proxy, and an OpenAI-compatible proxy server that can be self-hosted. The repository also includes documentation, resources, and contributions from the community.
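Because every provider is reached through the OpenAI format, a request to an OpenAI-compatible proxy looks like an ordinary OpenAI chat-completion call. The sketch below only builds such a request with the Python standard library and does not send it. The base URL `http://localhost:4000`, the model name, the placeholder key, and the helper `build_chat_request` are assumptions for illustration, not taken from the LiteLLM documentation.

```python
import json
import urllib.request

# Assumed address of a locally running OpenAI-compatible proxy (hypothetical).
PROXY_BASE_URL = "http://localhost:4000"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) an OpenAI-format chat request."""
    body = {
        # Model name; which provider serves it depends on the proxy's configuration.
        "model": model,
        # OpenAI-format message list: one user turn.
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{PROXY_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request("gpt-3.5-turbo", "Hello!", api_key="sk-placeholder")
print(request.full_url)  # http://localhost:4000/chat/completions
print(json.loads(request.data)["messages"][0]["content"])  # Hello!
```

Under this assumption, pointing the same payload at a different provider would only mean changing the `model` string, since the request shape stays the same.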

Key Features

- Completion: Provides a `completion()` function that returns completions from any supported model.

- Embedding & Moderation: Provides `embedding()` and `moderation()` functions.

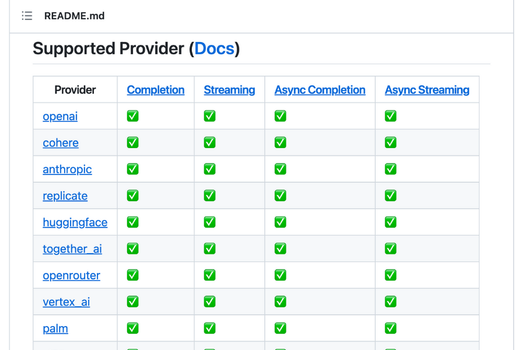

- Supported Models & Providers: Supports models from many providers, including those listed above.

- OpenAI Proxy Server: Runs an OpenAI-compatible proxy server in front of the supported providers.

- Budget Manager: Tracks spending on API requests against a set budget.

- Azure API Load-Balancing: Distributes API requests across multiple Azure deployments.

- Setting API Keys, Base, Version: Configures API keys, base URLs, and API versions.

- Completion Token Usage & Cost: Reports token usage and calculates the cost of each completion.

- Logging & Observability: Sends request logs to logging and observability tools.

- Caching: Caches responses so that repeated identical requests need not reach the provider.

- LangChain, LlamaIndex Integration: Integrates with LangChain and LlamaIndex.

- Migration: Provides guidance for migrating between LiteLLM versions.
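The budget and cost features rest on simple arithmetic: an OpenAI-format response carries a `usage` object with `prompt_tokens` and `completion_tokens`, and the cost of a call is each count multiplied by a per-token price. The sketch below is a hypothetical stand-in, not LiteLLM's own budget manager; the class `SimpleBudget`, the model name `example-model`, and its per-1K-token prices are made-up values for illustration.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real prices vary by model and provider.
PRICES_PER_1K = {
    "example-model": {"prompt": 0.0015, "completion": 0.002},
}


def completion_cost(model: str, usage: dict) -> float:
    """Cost of one response, computed from its OpenAI-format `usage` block."""
    price = PRICES_PER_1K[model]
    return (
        usage["prompt_tokens"] / 1000 * price["prompt"]
        + usage["completion_tokens"] / 1000 * price["completion"]
    )


@dataclass
class SimpleBudget:
    """Hypothetical per-user budget tracker (not LiteLLM's implementation)."""

    limit_usd: float
    spent_usd: float = 0.0

    def can_spend(self) -> bool:
        # Allow another request only while spending is below the limit.
        return self.spent_usd < self.limit_usd

    def record(self, model: str, usage: dict) -> float:
        # Add the cost of a finished request to the running total.
        cost = completion_cost(model, usage)
        self.spent_usd += cost
        return cost


budget = SimpleBudget(limit_usd=0.004)
usage = {"prompt_tokens": 1000, "completion_tokens": 500, "total_tokens": 1500}
print(round(budget.record("example-model", usage), 6))  # 0.0025
print(budget.can_spend())  # True: 0.0025 is below 0.004
budget.record("example-model", usage)
print(budget.can_spend())  # False: about 0.005 exceeds 0.004
```

Checking the budget before each call and recording the cost afterwards keeps the bookkeeping independent of which provider served the request, since every response reports usage in the same format.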