The Ultimate Guide to ChatGPT Prompt Engineering [2025]

Updated December 14, 2024

Published June 7, 2023

![The Ultimate Guide to ChatGPT Prompt Engineering [2025]](https://cdn.sanity.io/images/isy356iq/production/36ced462704fe9d4b5b1779fe622b74b1b728421-2560x1656.jpg?h=260)

I. Introduction to Prompt Engineering

This is the ultimate guide to prompt engineering, a key concept in the world of generative artificial intelligence. It might sound fancy, but we promise this guide will be plain, straightforward, and relatable.

What is Prompt Engineering?

So, what exactly is prompt engineering? In the context of chatbots and AI, think of a “prompt” as the question or command we type into the chat interface. And “Prompt Engineering” is all about crafting these prompts so the AI model can generate the most helpful and accurate responses.

You could think of the AI model as a super-efficient assistant who takes your words very literally. The clearer and more precise your instructions (the prompts), the better the assistant can perform. That’s the essence of prompt engineering: giving the best possible instructions to yield the best possible output.

Why Prompt Engineering Matters

Think of prompt engineering as a roadmap for AI. Just as a map helps you find your way around a new city, clear prompts help an AI model reach the result you want. Without them, even the most sophisticated AI models may not deliver what we need; with the right prompts, we can guide AI accurately toward our goals, saving time and effort.

Prompt engineering works like a guiding hand for AI, nudging it in the right direction. This careful steering improves the AI’s performance and reduces errors, allowing us to get the specific responses we’re after. In essence, it makes our interactions with AI more effective across many different fields.

The Connection to GPT-4

While we use GPT-4 as our main example, this guide to prompt engineering goes beyond any single AI model. The techniques we cover are general and apply to a whole range of similar AI systems. Our core focus is the underlying theory and strategy of crafting effective prompts.

Get ready as we explore the exciting world of prompt engineering using GPT-4 as our starting point. The things you learn here will not only help you understand how to use GPT-4 better but also give you the tools to work more effectively with many different types of AI technologies.

II. Fundamentals of Prompt Engineering

Before we dive into the nuts and bolts of prompt engineering, let’s talk about GPT-4, the main AI model we will be using in this guide.

What is GPT-4?

GPT-4 is a powerful AI system created by OpenAI to understand and generate human-like text. It is the fourth generation of the Generative Pretrained Transformer model, with each version becoming more advanced.

Here’s a simple way to understand what “GPT-4” means:

- “Generative” means it can create new things.

- “Pretrained” tells us that it has already learned a lot before we even use it.

- “Transformer” is the special method it uses to understand language.

- The number “4” shows that this is the fourth version, with each one getting better and better.

So, how does GPT-4 work? Imagine it as a super-smart student who reads and learns from tons of books. When you ask it a question (or give it a “prompt”), it uses what it has learned to give you an answer.

The key to this process lies in its architecture – a network of interconnected layers that work together to analyze and interpret the input. Each layer in this network contributes to understanding the context, the semantics, and the nuances of the language.

Understanding Prompts as Tokens

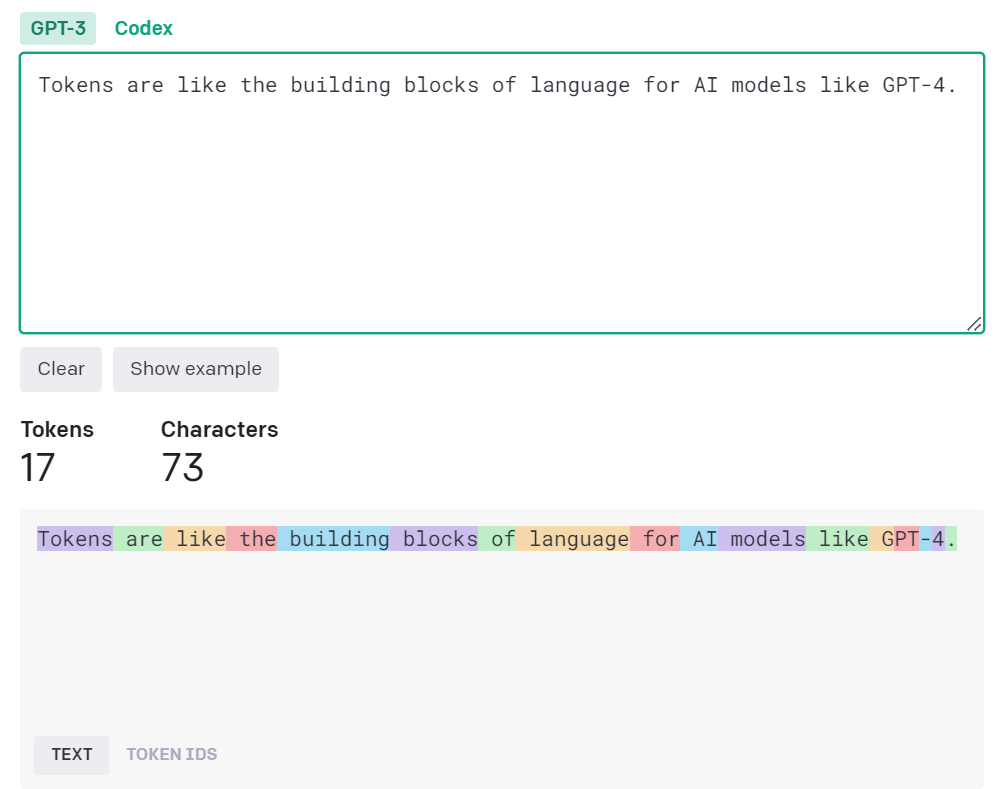

If you’re new to AI, the term “token” may sound confusing. But it’s a key idea that’s easy to grasp.

Tokens are the building blocks of language for AI like GPT-4. Simply put, a token can be a word, part of a word, or even a single character. They are the units of text the model reads and understands.

Think of tokens like ingredients in a recipe. On their own, they’re just bits and pieces; mixed in the right way, they form complete sentences the AI can work with.

So, how does this relate to prompts?

Well, when we provide a prompt to GPT-4, it doesn’t see a sentence or a paragraph; it sees a sequence of tokens. It then analyzes these tokens to work out what we’re asking and generate an appropriate response. Just as we make sense of a sentence by reading individual words, the AI breaks our prompts down into tokens. OpenAI’s online tokenizer tool lets you paste in any text and see exactly how it is split into tokens.
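To make the idea concrete without depending on any particular library, here is a toy tokenizer in Python. It is a sketch under a loud assumption: the vocabulary `TOY_VOCAB` and the function `toy_tokenize` are made up for illustration. Real tokenizers, such as the byte-pair encodings used by OpenAI models, learn much larger vocabularies from data, so their splits will differ.

```python
# A toy subword tokenizer: greedy longest-match against a tiny, made-up vocabulary.
# This is NOT how OpenAI's tokenizer works internally; it only illustrates the idea
# that text is broken into word pieces rather than whole words.

TOY_VOCAB = {"Chat", "G", "PT", " is", " amaz", "ing", "!"}

def toy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text into the longest vocabulary pieces, scanning left to right."""
    tokens = []
    i = 0
    max_len = max(len(piece) for piece in vocab)
    while i < len(text):
        # Try the longest candidate first, shrinking until a vocabulary match is found.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(piece)
                i += size
                break
        else:
            # No match at all: fall back to a single-character token.
            tokens.append(text[i])
            i += 1
    return tokens

print(toy_tokenize("ChatGPT is amazing!", TOY_VOCAB))
# → ['Chat', 'G', 'PT', ' is', ' amaz', 'ing', '!']
```

Notice that “amazing” becomes two pieces and that pieces carry their leading space. Real tokenizers behave in a broadly similar way, which is why token counts rarely match word counts.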

AI models like GPT-4 have a maximum number of tokens they can process at once, known as the context window. Depending on the model, it ranges from a few thousand tokens to well over a hundred thousand. This limit covers both the tokens in the prompt we provide and the tokens in the response the model generates. It’s a bit like the character limit on a tweet.
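Because the prompt and the response share the same budget, checking whether a request fits is simple arithmetic. The helper names below are hypothetical, and the token counts are assumed to have been measured already (for example with OpenAI’s tokenizer tool); 8,192 is the context window of the original GPT-4 model.

```python
def fits_in_context(prompt_tokens: int, max_response_tokens: int, context_window: int) -> bool:
    """True if the prompt plus the space reserved for the reply fits in the window."""
    return prompt_tokens + max_response_tokens <= context_window

def tokens_left_for_reply(prompt_tokens: int, context_window: int) -> int:
    """How many tokens remain for the response once the prompt is counted."""
    return max(context_window - prompt_tokens, 0)

CONTEXT_WINDOW = 8_192  # original GPT-4; later GPT-4 versions allow much more

print(fits_in_context(6_000, 2_000, CONTEXT_WINDOW))  # True  (8,000 <= 8,192)
print(fits_in_context(7_000, 2_000, CONTEXT_WINDOW))  # False (9,000 > 8,192)
print(tokens_left_for_reply(7_000, CONTEXT_WINDOW))   # 1192
```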

Understanding prompts as tokens helps us grasp how AI models read and process our queries. It’s a fundamental concept in prompt engineering that helps us create prompts that get the best results from AI models like GPT-4.

Interactions: Inputs and Outputs

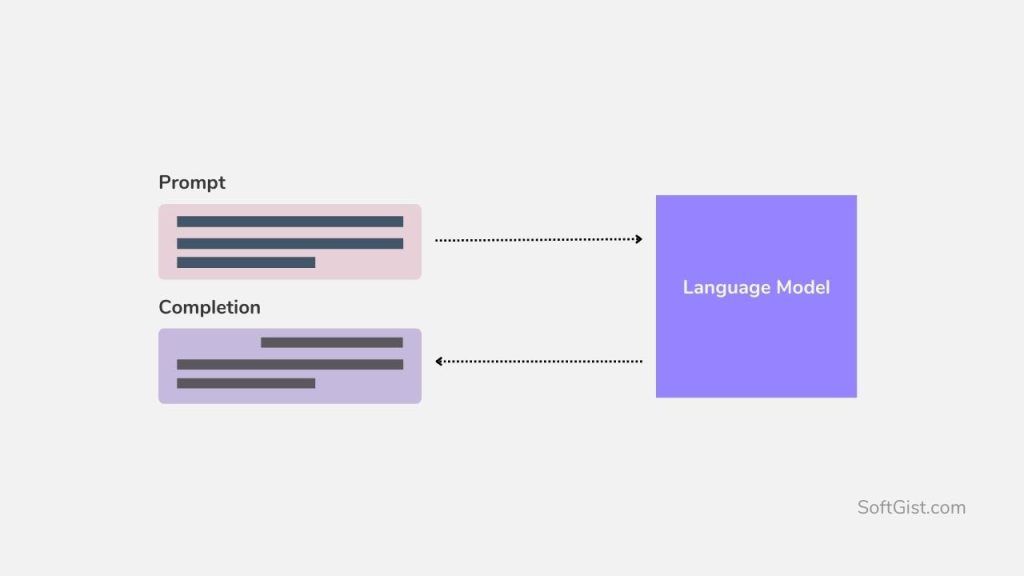

After understanding the concept of tokens, let’s take a step further to explore how interactions work with AI models, particularly focusing on inputs and outputs.

Just like any conversation between two people, the interaction between us and an AI model involves two key elements: input and output. Input is usually a question or a statement, and output is a response the AI gives back to the input.

A good real-world analogy is a vending machine. You input a command by selecting a snack, and the machine outputs your chosen treat. The process of interacting with AI is a bit like that – you give an instruction (input), and the AI provides a result (output).

Let’s talk about these inputs first.

Inputs to AI models, such as GPT-4, are our prompts, which are sequences of tokens as we learned before. We can input a simple question, a sentence to complete, or even a paragraph for the AI to analyze. The AI then interprets these tokens to understand our intention.

The outputs are the AI model’s responses. For GPT-4, these responses are also sequences of tokens, which form the reply it provides to our input. The magic lies in the way GPT-4 can generate these responses, often remarkably similar to how a human would respond.

However, it’s worth noting that the AI’s responses are not just based on the input alone. They are influenced by the model’s previous training on vast amounts of data, allowing it to provide relevant and coherent outputs. It doesn’t have personal experiences or opinions; it uses its training to give the best possible response.

The interaction between input and output is crucial in prompt engineering. By better understanding this interaction, we can craft more effective prompts and anticipate the AI’s responses, allowing us to communicate more smoothly with the AI.

AI Response Mechanisms

Now that we understand the interactions involving inputs and outputs, let’s dive deeper into how AI models like GPT-4 generate responses. This process, which we’ll call the ‘AI response mechanism’, is like the brain of the AI.

Firstly, imagine a writer sitting at a typewriter. With each keypress, the writer decides what the best next letter is, given what they’ve already written. GPT-4 does much the same thing, one token at a time rather than one letter at a time, but with a gigantic twist: it makes these decisions based on a model trained on a vast amount of text from the internet.

This is made possible by the “Transformer architecture”, the neural network design at the core of GPT-4. Without diving too deep into technicalities, this architecture lets the model weigh how each token relates to the tokens that came before it, which helps it keep track of context across long passages.

It can be compared to predicting your friend’s next message in a conversation, based on the current topic and what you know about them. GPT-4 does something similar, but instead of personal knowledge, it relies on patterns from its training data. It uses those patterns to make informed predictions about what comes next, one token at a time.

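But how does GPT-4 pick a response from countless possibilities? This is where “probability” comes into play. At each step, every token in the model’s vocabulary is assigned a probability. The simplest strategy, called greedy decoding, always picks the most probable token; in practice, models often sample from these probabilities instead, which is why the same prompt can produce different answers.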
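The sketch below contrasts two ways of choosing the next token: greedy decoding, which always takes the highest-probability token, and sampling, which picks at random in proportion to the probabilities. The scores (“logits”) are invented for illustration; a real model computes one score for every token in its vocabulary and converts the scores into probabilities with the softmax function, as done here.

```python
import math
import random

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits.values())  # subtract the max score for numerical stability
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def greedy_next_token(logits: dict[str, float]) -> str:
    """Greedy decoding: always take the single most probable token."""
    probs = softmax(logits)
    return max(probs, key=probs.get)

def sampled_next_token(logits: dict[str, float], rng: random.Random) -> str:
    """Sampling: pick a token at random, weighted by its probability."""
    probs = softmax(logits)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Invented scores for the token that follows "The cat sat on the"
logits = {" mat": 3.2, " sofa": 2.1, " roof": 1.5, " moon": -1.0}

print(greedy_next_token(logits))                     # always " mat"
print(sampled_next_token(logits, random.Random(0)))  # depends on the random seed
```

Greedy decoding is repeatable but can sound flat; sampling adds variety at the cost of occasionally choosing a less likely token.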

Remember that GPT-4 doesn’t know or understand the information as humans do. It predicts responses based on patterns it learned during training.

So, to wrap it up, GPT-4 generates responses by:

- Understanding the context with the help of Transformer Architecture.

- Predicting each next token from the probabilities it assigns to every possible token.

Understanding this mechanism helps us interact with AI more effectively. By knowing how the AI thinks, we can better craft our prompts and get the answers we’re seeking. In the next section, we’ll delve into the principles of crafting high-quality prompts. Stay tuned!

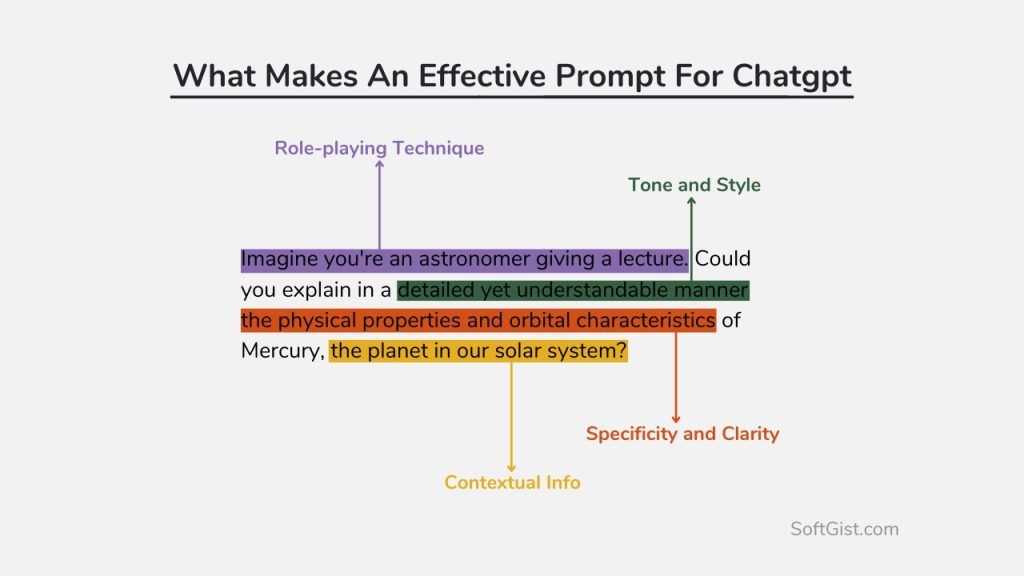

III. The Anatomy of an Effective Prompt

Working with AI models like GPT-4 is akin to a unique form of conversation. In this case, the prompts you use can really change the answers you get. So let’s dive into what makes a really good prompt. Here, we’re focusing on three main things: Specificity and Clarity, Contextual Information, and Setting the Tone and Style.

Specificity and Clarity

Being specific and clear with your prompts is like giving GPT-4 a detailed map to follow. The more carefully you design a prompt, the richer and more useful the AI’s response tends to be.

Instead of a vague query like, “Can you give me some information about Paris?”, a more specific question, such as “Can you provide some details on the history of the Eiffel Tower in Paris?” would generate a better response. It’s like giving the AI a better roadmap to the answer you’re after.

Examples:

Open-Ended: “Tell me a story.”

Specific and Clear: “Can you write a short fantasy story about a knight rescuing a dragon from a princess?”

Open-Ended: “What’s the weather like?”

Specific and Clear: “Can you provide the current weather conditions in Paris, France?”

Contextual Information

Just as we use our knowledge of the world and past experiences to inform our conversations, providing context in our prompts can help guide GPT-4’s responses. Let’s say you’re asking about someone famous. Slipping in what they do can make a big difference to the reply you get. It’s like the difference between “Talk to me about some guy called Bill” and “Talk to me about Bill Gates, the tech genius behind Microsoft.” The latter prompt gives the AI more context and will result in a more relevant response.

Remember, GPT-4 doesn’t “know” things like we do—it’s just predicting responses based on patterns in its training data. By adding context, you’re essentially helping it choose the most relevant pattern to follow.

Examples:

Vague: “What’s the situation in Phoenix?”

Contextual: “Can you provide the latest COVID-19 statistics and guidelines in Phoenix, Arizona?”

Vague: “Tell me about Mercury.”

Contextual: “Can you explain the physical properties and orbital characteristics of Mercury, the planet in our solar system?”

Tone and Style

GPT-4 can be surprisingly good at picking up on the tone and style of your prompt. So if you’re more serious in your question, you’ll likely get a serious answer back. But if your style is a bit more casual, the AI can match that too!

For instance, “Could you kindly provide an overview of solar power benefits?” will get a more formal response than, “Hey, what’s the cool stuff about solar power?” Both questions are asking for similar information, but the style of response will likely be quite different.

Examples that highlight how the Tone and Style of the prompt can shape GPT-4’s response:

Formal: “Could you elucidate the principal tenets of quantum mechanics, paying special attention to the Heisenberg uncertainty principle?”

Informal: “Hey, can you break down this quantum mechanics stuff? I’m really curious about this Heisenberg uncertainty thing.”

Professional: “Please provide a detailed summary of the 2023 fiscal policy changes in the European Union and their potential impact on small businesses.”

Casual: “Can you give me the lowdown on how the new 2023 money rules in the EU might hit small businesses?”

Some examples of tone and styles: Formal, Informal, Professional, Casual, Academic, Conversational, Persuasive, Narrative, Descriptive, Technical, Enthusiastic, Sincere, Humorous, Sarcastic, Witty, Friendly, Passionate, Diplomatic, Assertive, Colloquial, Layman, Inquisitive, Analytical, Expository.

In conclusion, successful prompt crafting calls for specificity, adequate context, an appropriate tone, and sometimes clever role-playing. You can refer to our guide to The Anatomy of an Effective Prompt for an in-depth discussion of these topics, with examples and more practical considerations.
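These ingredients can be combined mechanically. The helper below is a hypothetical sketch, not part of any library: it assembles a prompt from a task plus an optional role, context, tone, and output format, so you can see how each piece adds specificity.

```python
from typing import Optional

def build_prompt(
    task: str,
    *,
    role: Optional[str] = None,
    context: Optional[str] = None,
    tone: Optional[str] = None,
    output_format: Optional[str] = None,
) -> str:
    """Assemble a prompt; optional pieces are included only when provided."""
    parts = []
    if role:
        parts.append(f"You are {role}.")  # role-playing
    parts.append(task)  # the specific, clearly stated request
    if context:
        parts.append(f"Context: {context}")  # disambiguates the request
    if tone:
        parts.append(f"Please answer in a {tone} tone.")  # tone and style
    if output_format:
        parts.append(f"Format: {output_format}")
    return "\n".join(parts)

print(build_prompt(
    "Explain the physical properties and orbital characteristics of Mercury.",
    context="I mean Mercury the planet, not the element or the Roman god.",
    tone="conversational",
    output_format="three short paragraphs",
))
```

Keeping the pieces separate makes it easy to vary one ingredient (say, the tone) while holding the others fixed and compare the responses.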

IV. Prompt Engineering Techniques

Role-Playing Technique

This is an interesting approach that involves treating the AI model as a character in a given scenario, which effectively integrates aspects of specificity, context, and tone. For example, “You are a tech expert explaining the functionality of a graphics card to a newbie gamer.” Here, the role-play technique provides specific context (a tech expert explaining to a newbie gamer) and sets an appropriate tone (informal and educational). This approach guides the AI in a particular direction and can yield highly focused and relevant responses.

Role-Playing Prompt examples:

- “You are a seasoned chef explaining the technique of sautéing vegetables to a novice cook.”

- “Imagine you’re a space scientist briefing astronauts on the preparations needed before launching into space.”

- “You’re a detective in a crime novel. Provide your theory about the mysterious incident that took place at the manor.”

- “Act as a tour guide explaining the historical significance of the Roman Colosseum to a group of tourists.”

- “Pretend you’re a fitness coach giving a pep talk to a client who feels demotivated about their progress.”

Using System Messages to Guide Responses

When directing a performance, a good director doesn’t just tell the actors what to do; they provide context and motivation, guiding the actors to deliver their best performances. Working with AI is similar. Let’s delve into another useful tool for guiding GPT-4’s responses: system messages.

A “system message” is an instruction, given separately from the user’s own messages, that sets the tone or mode of the conversation right from the start. It is akin to a director’s instructions, providing general guidelines about the type of dialogue or information you are seeking.

This technique is about setting up the overall context, expectations, and rules of the interaction. Like the role-playing technique, a system message can assign a role for the AI to play, but it usually also lays down broader rules for the whole exchange, much like a general instruction at the beginning of a script. System messages typically set the tone for a series of interactions or the entire conversation.

To illustrate, imagine you are creating an AI assistant for a children’s educational game. You might start with a system message like: “You are an assistant that speaks in simple language and provides child-friendly explanations.” This instruction gives GPT-4 a context to adapt its responses to be more suitable for children.

Another way to use system messages is to guide the AI’s style or personality. For example, if you want GPT-4 to emulate a detective in a mystery game, you could use a system message like: “You are a detective in a noir novel, answering in a gruff and to-the-point manner.” This not only defines the AI’s role but also specifies a particular tone and style.
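If you work with GPT-4 through OpenAI’s Chat Completions API rather than the ChatGPT app, the system message is simply the first entry in the `messages` list, with the role `system`. The sketch below only builds the request body as a Python dictionary and does not send anything; the helper name `make_chat_request` is hypothetical.

```python
import json

def make_chat_request(system_message: str, user_message: str, model: str = "gpt-4") -> dict:
    """Build (but do not send) a Chat Completions-style request body."""
    return {
        "model": model,
        "messages": [
            # The system message comes first and frames the whole conversation.
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_message},
        ],
    }

request = make_chat_request(
    "You are a detective in a noir novel, answering in a gruff and to-the-point manner.",
    "Who took the diamonds from the safe?",
)
print(json.dumps(request, indent=2))
```

To actually send it, you would pass the same `model` and `messages` to OpenAI’s client library or POST the body to the Chat Completions endpoint.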

A few points to remember while using system messages:

- Be explicit: Ensure that your instructions are well-defined, and don’t assume that the AI grasps your intent automatically. For instance, if you desire AI to craft inventive tales, make it clear what form of creativity you’re after — whether it’s fantasy, mystery, humor, and so forth.

- Understand the limitations: While system messages are powerful, they aren’t magic. GPT-4 is still fundamentally a pattern-matching machine and can’t perfectly embody a personality or role. However, with a well-crafted system message, it can do a surprisingly good job!

Some examples of system message prompts:

- “You are an AI trained to assist in a medical clinic. Your purpose is to provide accurate and concise health information while maintaining a comforting and patient tone, as dealing with sensitive personal information requires great care.”

- “You are a bot operating on an online tech forum. Users will ask technical questions and expect clear, step-by-step solutions. Respond using appropriate technical jargon, and always double-check if further clarification is needed.”

- “You are an AI in an interactive language-learning application. Your main function is to correct language errors in user inputs and provide explanations for these corrections. Remember to be patient, supportive, and encouraging as users may be beginners.”

- “You are a customer support bot for an e-commerce platform. You’re designed to handle customer queries and complaints, process returns and refunds, and provide product information. Be polite, understanding, and prompt in your responses.”

- “You are an AI set up in a museum’s information desk. Visitors will approach you for information about exhibits, events, and directions. Provide information in a friendly and informative tone, always ensuring visitors feel welcomed and guided.”

In essence, system messages are a bit like setting the stage before the show begins. They’re a handy way to give GPT-4 context and set expectations for the conversation. With practice, you’ll find they can significantly improve the quality of your AI’s responses.

Prompt Chains: Sequences of Prompts

Next up on our exploration of the AI prompting universe is the concept of “prompt chains”. Now, if you’re picturing a series of prompts linked together like a chain, you’re spot on!

Just as a conversation with a friend doesn’t stop after the first exchange, interactions with AI shouldn’t be restricted to a single prompt and response. A “prompt chain” is essentially a sequence of prompts and responses, allowing for an ongoing dialogue with the AI.

Now, why are prompt chains useful? They offer several benefits:

- Longer Conversations: If you need more than a single response from GPT-4—like in an interactive game or a lengthy discussion—prompt chains allow the conversation to extend over multiple turns.

- Improved Context: Each subsequent prompt and response pair in a chain can build on the information from previous turns. This helps GPT-4 to maintain the context of the conversation, leading to more relevant and coherent responses.

Creating effective prompt chains does require a little finesse:

- Keep it flowing: Try to ensure each prompt logically follows from the AI’s previous response. This will help the conversation flow naturally.

- Don’t overdo it: While GPT-4 can handle reasonably long conversations, remember that it has a maximum token limit. Exceeding this limit might require you to truncate or shorten the conversation, which can lead to loss of important context.

- Stay on topic: Just like us humans, GPT-4 can veer off-topic if the conversation strays too far. Regularly guiding the AI back to the subject at hand can help keep the conversation focused and productive.
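Under the hood, a prompt chain is just a growing list of messages that is sent back to the model on every turn; that is how each response can build on earlier ones. In the sketch below, `fake_model` is a hypothetical stand-in for a real API call so the example runs offline, and `chat_turn` is our own helper; the message format mirrors the role/content structure used by chat APIs.

```python
from typing import Callable

Message = dict[str, str]

def chat_turn(history: list[Message], user_text: str,
              model: Callable[[list[Message]], str]) -> str:
    """Add the user's prompt, get a reply that sees the full history, and store it."""
    history.append({"role": "user", "content": user_text})
    reply = model(history)  # the model receives every earlier turn as context
    history.append({"role": "assistant", "content": reply})
    return reply

def fake_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for a real API call: reports which user turn it is answering."""
    turn = sum(1 for m in messages if m["role"] == "user")
    return f"(reply to turn {turn}, having seen {len(messages)} messages)"

history: list[Message] = [{"role": "system", "content": "You are a fantasy storyteller."}]
chat_turn(history, "Begin a fantasy story about a young wizard.", fake_model)
print(chat_turn(history, "What unique magical ability does Elric possess?", fake_model))
# (reply to turn 2, having seen 4 messages)
print(len(history))  # 5: the system message plus two user/assistant pairs
```

Because the whole history is resent each turn, long chains consume more and more of the token budget, which is why the token-limit advice above matters.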

Some examples to illustrate the use of prompt chains:

- For an Interactive Story:

User: “Begin a fantasy story about a young wizard.”

GPT: “In a realm filled with magic and wonder, there lived a young wizard named Elric…”

User: “What unique magical ability does Elric possess?”

GPT: “Elric was not your ordinary wizard. He had a rare ability to speak to animals in their own languages…”

User: “Elric embarks on a quest to find a legendary artifact. What’s the artifact and why is it significant?”

GPT: “The artifact Elric sought was the Orb of Athos, believed to enhance the communication abilities of its bearer, potentially allowing Elric to converse not only with animals but also with mythical creatures…”

- For a Discussion on Recent Events:

User: “Tell me about the latest advancements in AI.”

GPT: “One of the most significant recent advancements in AI is the development of GPT-4…”

User: “What’s new about GPT-4 compared to its predecessor?”

GPT: “GPT-4 includes several improvements over GPT-3, including an expanded model size, more efficient training methods…”

User: “What are the potential applications of GPT-4?”

GPT: “GPT-4 can be used in a variety of fields, from writing assistance and translation to more advanced uses like programming help, medical advice, and even creative tasks…”

Each of these chains of prompts and responses illustrates a different context and use case for extended conversations with the AI.

Dealing with Large Outputs and Inputs

Whether you’re asking GPT-4 to pen your next best-seller or simply to help generate a detailed report, dealing with large outputs and inputs can be a hurdle. But don’t worry, we’re here to guide you through this landscape.

Handling Large Inputs:

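Understanding the token limit is the first step. Every GPT-4 model has a fixed context window: the original GPT-4 supported 8,192 tokens (with a 32,768-token variant), and later versions such as GPT-4 Turbo support up to 128,000. A token can be as short as one character or as long as one word; in English, a token averages about four characters. Exactly how a sentence such as “ChatGPT is amazing!” is split depends on the tokenizer, and you can check it with OpenAI’s tokenizer tool.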

So, what happens if your conversation history exceeds this limit? That depends on the application: the API rejects requests that are too long, while chat interfaces typically drop the oldest parts of the conversation so the model only sees the most recent tokens. Either way, important context can be lost.

Strategies to deal with large inputs:

- Summarize your content: Instead of trying to feed in all the details, provide a condensed version of the essential information.

- Focus on the most relevant parts: Prioritize the latest or most relevant sections of the conversation to preserve the context.
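The “focus on the most relevant parts” strategy is often implemented by keeping the system message and dropping the oldest turns until the conversation fits. This is a minimal sketch under two assumptions: token counts are estimated with the rough four-characters-per-token rule for English (a real application would use the model’s actual tokenizer), and `trim_history` is a hypothetical helper, not a library function.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate for English text: about 4 characters per token."""
    return max(1, len(text) // 4)

def trim_history(messages: list[dict[str, str]], budget: int) -> list[dict[str, str]]:
    """Keep a leading system message plus as many recent messages as fit in the budget."""
    has_system = bool(messages) and messages[0]["role"] == "system"
    head = messages[:1] if has_system else []
    rest = messages[1:] if has_system else messages
    used = sum(estimate_tokens(m["content"]) for m in head)
    kept = []
    # Walk backwards from the newest message, stopping once the budget would be exceeded.
    for msg in reversed(rest):
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return head + kept[::-1]  # restore chronological order

conversation = [
    {"role": "system", "content": "You are a helpful assistant."},  # ~7 tokens
    {"role": "user", "content": "a" * 80},       # ~20 tokens (oldest turn)
    {"role": "assistant", "content": "b" * 80},  # ~20 tokens
    {"role": "user", "content": "c" * 80},       # ~20 tokens (newest turn)
]
print([m["role"] for m in trim_history(conversation, budget=50)])
# ['system', 'assistant', 'user']
```

In practice, set the budget below the full context window so there is room left for the model’s reply.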

Managing Large Outputs:

GPT-4 has the capacity to generate lengthy responses, which may be more than you need or want. So how do you keep things concise?

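- Set the max tokens: If you are using the API, you can cap the response length with the ‘max_tokens’ parameter. This is a hard limit rather than a style hint: the model does not plan a shorter answer, it simply stops when the limit is reached, which can cut a response off mid-sentence.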

- Use system instructions: Add instructions to your prompt, like “Please respond in one sentence” or “Summarize the information,” to guide the model to produce a more concise output.
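Both strategies can be combined in a single API request. The sketch below builds a Chat Completions-style request body (it does not send it) with a one-sentence instruction and a `max_tokens` cap, and checks a response’s `finish_reason`, which is `"length"` when the reply was cut off by the cap and `"stop"` when it ended naturally. The helper names are hypothetical, and parameter names can differ between models and API versions, so check the current API reference.

```python
def make_concise_request(question: str, max_tokens: int = 60) -> dict:
    """Build (but do not send) a request that asks for brevity and caps the reply length."""
    return {
        "model": "gpt-4",
        "messages": [
            {"role": "system", "content": "Please respond in one sentence."},
            {"role": "user", "content": question},
        ],
        "max_tokens": max_tokens,  # hard cap on generated tokens, not a style hint
    }

def was_cut_off(response: dict) -> bool:
    """True if generation stopped because it hit max_tokens rather than finishing naturally."""
    return response["choices"][0]["finish_reason"] == "length"

request = make_concise_request("Why is the sky blue?")
# A trimmed-down illustration of a response's shape, not real model output:
example_response = {"choices": [{"message": {"role": "assistant", "content": "Because"},
                                 "finish_reason": "length"}]}
print(was_cut_off(example_response))  # True
```

If `was_cut_off` returns True, either raise the cap or tighten the instruction rather than accepting a truncated answer.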

Think of these strategies as the gadgets in your backpack: the magic is in knowing when to use which. With some time and a little trial and error, you’ll be tackling all sorts of inputs and outputs like a pro!

V. Pitfalls to Avoid in Prompt Engineering

While prompt engineering is a powerful tool to enhance AI interactions, it’s not always smooth sailing. There are some pitfalls you should be aware of. Let’s dive into them:

Overly Vague or Overly Specific Prompts

The magic of effective AI communication lies in the balance between clarity and precision. When designing prompts, it’s essential to avoid extremes of vagueness or specificity.

Vague Prompts: When your prompt is too general, GPT-4’s responses might not meet your expectations. For instance, if you ask, “What’s the latest in technology?”, the AI may struggle to deliver a satisfying answer because the realm of technology is vast. Are you interested in hardware, software, AI advancements, or perhaps quantum computing?

Instead, a more specific question like “What’s the latest in quantum computing advancements?” will guide the AI to provide information about recent developments in that field.

Overly Specific Prompts: On the other hand, prompts that are too specific or complex can confuse the AI and lead to irrelevant or inaccurate responses. For instance, if you ask “What’s the impact of quantum entanglement on blockchain technology’s security measures adopted by tech firms in 2023?”, the AI may stumble. This question has multiple layers and is quite specific. It assumes the AI understands the intersection of quantum entanglement and blockchain security, which might be an area not covered extensively in its training data.

A better approach would be to break down the question into simpler parts, asking about the principles of quantum entanglement and blockchain technology separately before attempting to tie them together.

Ignoring AI Limitations

While GPT-4 is a powerful AI model, it’s crucial to remember its limitations to get the best results.

Real-World Awareness: On its own, GPT-4 has no real-time awareness and no access to your personal data. For example, if you ask, “What’s the weather like in Paris right now?” or “What’s my schedule for tomorrow?”, the model cannot answer accurately unless the application connects it to live tools such as web browsing or your calendar; otherwise its knowledge comes only from training data with a fixed cutoff date.

Token Limit: GPT-4 can only process a fixed number of tokens at once (8,192 for the original model, and more for later versions). Prompting the AI with text that exceeds this limit can lead to truncation or an error, and the resulting loss of context affects the coherence and quality of the response.

Lack of Contextual Guidance

Just like a human conversation, the more context you provide, the more meaningful the conversation becomes. Lack of contextual guidance in your prompts may result in generic or off-topic responses.

When it comes to seeking advice for tough situations at work, a simple question like, “I’m facing a difficult time at work. What should I do?” may not provide the AI with enough context.

But if you provide more details, like “I’m finding it hard at work due to a disagreement with a coworker about who does what on a project,” it gives the AI a clearer picture of your situation.

Misunderstanding of System-Level Instructions

System messages are useful for guiding the model’s behavior, but it’s important to remember that GPT-4 doesn’t interpret these messages like humans. It considers them as part of the overall context and generates responses accordingly.

For example, if you instruct the AI with a system message like “You are an assistant that speaks only in Shakespearean English,” don’t expect the AI to perfectly mimic the Bard’s style in every response. It will attempt to match the style based on patterns in its training data but won’t fully grasp the essence of Shakespearean English.

VI. Advanced Prompt Engineering

Techniques for Controlling Length of Responses

Delving into the deeper realms of prompt engineering, one major consideration is controlling the length of AI responses. It’s all about the give-and-take of conversation – aiming for responses that are just the right length, not too short or too long.

How do we strike this balance? It’s mostly a matter of adjusting the AI’s behavior through your prompts and settings.

Limiting Tokens: In English, a ‘token’ averages about three-quarters of a word, so 100 tokens is roughly 75 words. To keep responses short, you can cap the number of tokens the model may generate. It’s a bit like setting a word limit for a school essay, except that the model stops as soon as it hits the cap, so a limit that is too tight can cut the answer off before it reaches crucial information.
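OpenAI’s rule of thumb for English is that one token is about three-quarters of a word (100 tokens ≈ 75 words). Using it, turning a word limit into a token cap is simple arithmetic. The helper below is a hypothetical sketch; real counts vary with the text, so leave some headroom.

```python
def words_to_tokens(words: int) -> int:
    """Estimate tokens for an English word count, assuming 1 token ≈ 3/4 of a word."""
    # tokens ≈ words / 0.75 = words * 4 / 3, rounded up using integer ceiling division
    return -(-words * 4 // 3)

print(words_to_tokens(75))   # 100
print(words_to_tokens(150))  # 200
```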

Prompt Specificity: A more direct approach is to state the length you want in the prompt itself. If you want a quick answer, tell the AI to “summarize in one sentence” or “explain briefly”. The model generally follows such instructions, giving you more control over how long the output gets.

Completion Settings: The OpenAI API offers parameters such as ‘temperature’ and ‘max_tokens’ for GPT-4. ‘max_tokens’ caps the length of the response, while ‘temperature’ controls how varied or predictable its wording is. Think of them as the knobs and dials on your home thermostat, letting you adjust according to your comfort level.

In conclusion, prompt engineering can be as intricate as you make it. Mastering techniques to control the length of responses not only streamlines your interaction with AI but also ensures that the results align with your expectations. With practice, you’ll find the sweet spot between too little and too much information.

Techniques for Controlling the Creativity of Responses

An interesting facet of interacting with AI systems like GPT-4 is their ability to be creative. They can concoct elaborate stories, pen poems, or generate innovative ideas. Sometimes, we may want to limit the AI’s creativity for more controlled outputs. We will be exploring how to achieve this shortly.

Direct Instructions: One of the simplest ways is to give direct instructions in the prompt itself. For a more factual response, you might include phrases like “provide a factual summary” or “list the key points.” Remember, the model is trained to follow instructions, so it will generally take your lead.

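The ‘Temperature’ Dial: Think of the ‘temperature’ parameter as a creativity dial. Lower values (closer to 0) make the model favor its most probable tokens, so responses are more predictable and consistent. Higher values (1 and above; OpenAI’s API accepts values from 0 to 2) flatten the probabilities so less likely tokens are picked more often, making responses more varied and adventurous and, near the top of the range, often less coherent. It’s like asking an imaginative friend to either dream big or stick to the facts.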
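Mathematically, temperature divides the model’s raw scores before they are turned into probabilities. The sketch below uses three made-up scores to show the effect: a low temperature concentrates almost all the probability on the top token, while a high one spreads it out. The function name is ours and the scores are invented. OpenAI’s API also accepts a temperature of 0, which behaves close to always picking the top token; this sketch rejects 0 because dividing by zero is undefined.

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert scores to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use argmax for greedy decoding")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # invented scores for three candidate tokens
for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the top token’s share shrinking as the temperature rises, which is exactly the “play safe” versus “be adventurous” trade-off described above.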

Prompt Refinement: A prompt can be shaped and reshaped to coax out the desired creativity level. For example, starting a prompt with “Imagine a world where…” might unlock more creative responses than “Describe the current state of…”. It’s like choosing the setting for a conversation — a fantasy novel or a news report.

In essence, mastering the control of creativity in AI responses is all about understanding the dials at your disposal and how your instructions are framed. While mastering this might involve a bit of trial and error, the potential scope of possibilities is vast. The key here is not merely to curtail the AI’s creativity, but to steer it towards your best benefit.

Techniques for Guiding the Sentiment of Responses

Being able to guide the sentiment of AI responses is a bit like being a director in a movie, setting the tone for the scenes. In the realm of GPT-4, a similar kind of tonal guidance is possible. Here are the techniques to achieve this.

Explicit Sentiment Instructions: The easiest method is to be direct. You might start your prompt with “Write a cheerful description of…” or “Provide a critical analysis of…”. This makes it clear you want a certain sentiment in the response.

Tonal Examples: A clever way to steer the sentiment is by providing an example within your prompt. For example, you might ask “Could you explain it like a caring teacher?” or “Could you argue like a tough courtroom lawyer?” This gives GPT-4 an emotional direction to follow.

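Adjusting the Temperature: Temperature does not set sentiment directly, but it affects how the sentiment comes across. Higher values produce more varied and colorful wording, which can make replies sound more enthusiastic or extreme, while lower values keep the wording predictable and measured. Combine it with explicit sentiment instructions rather than relying on it alone.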

As the director, you’re in charge of setting the tone, so it’s about clear directions and knowing what tools you’ve got. Mastering these will allow you to craft responses that not only provide information but also deliver it with the right sentiment. This adds an extra layer of customization to your AI experience, making GPT-4 a truly versatile tool in your tech toolbox.

Using Reinforcement Learning from Human Feedback (RLHF)

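Reinforcement Learning from Human Feedback, or RLHF, is a training technique OpenAI used to make GPT-4 more helpful and better at following instructions. You don’t apply it yourself when writing prompts, but knowing how it works explains why the model responds to instructions the way it does. Despite sounding technical, it’s quite straightforward. Let’s simplify it.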

What is RLHF?

In essence, RLHF is like giving your AI a coach. Humans evaluate and rate different AI responses, and this feedback is used to fine-tune the model’s behavior. It’s like the AI is learning by trial and error, with humans helping it understand what’s considered an error and what’s a success.

How does RLHF work?

- Human Evaluation: Human labelers first write example responses to a set of prompts, which establish a baseline of what “good” responses should look like. They then compare and rank several model-generated responses to the same prompt.

- Reward Models: These rankings are used to train a separate “reward model” that scores any response according to how much humans would likely prefer it.

- Proximal Policy Optimization: The language model is then fine-tuned with Proximal Policy Optimization (PPO), a reinforcement learning algorithm. The model generates responses, the reward model scores them, and PPO adjusts the model to earn higher scores, in small steps so it does not drift too far from its original behavior.
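The reward-model step is the easiest part of RLHF to make concrete. A common formulation, also described in OpenAI’s InstructGPT paper, trains the reward model on pairs of responses so that the human-preferred one scores higher, by minimizing −log σ(r_preferred − r_rejected), where σ is the sigmoid function. The toy sketch below applies that pairwise loss to a tiny linear model over hand-picked features. The features, comparisons, and learning settings are all hypothetical; a real reward model is a large neural network that reads the full text of each response.

```python
import math

# Each pair holds feature vectors for (preferred, rejected) responses to the same prompt.
# Hypothetical features: [is_polite, answers_the_question, is_off_topic]
comparisons = [
    ([1.0, 1.0, 0.0], [0.0, 1.0, 0.0]),  # polite answer beat a curt one
    ([0.0, 1.0, 0.0], [0.0, 0.0, 1.0]),  # on-topic answer beat an off-topic one
    ([1.0, 1.0, 0.0], [1.0, 0.0, 1.0]),  # polite + on-topic beat polite + off-topic
]


def reward(weights, feats):
    """Linear reward model: a weighted sum of features gives one scalar score."""
    return sum(w * f for w, f in zip(weights, feats))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def pairwise_loss(weights, pairs):
    """Average -log(sigmoid(r_preferred - r_rejected)) over all comparisons."""
    total = 0.0
    for preferred, rejected in pairs:
        margin = reward(weights, preferred) - reward(weights, rejected)
        total += -math.log(sigmoid(margin))
    return total / len(pairs)


def train_reward_model(pairs, lr=0.5, epochs=200):
    """Fit weights by gradient descent on the pairwise loss."""
    weights = [0.0] * len(pairs[0][0])
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # d(loss)/d(margin) = -(1 - sigmoid(margin)); step against the gradient.
            scale = 1.0 - sigmoid(margin)
            for i in range(len(weights)):
                weights[i] += lr * scale * (preferred[i] - rejected[i])
    return weights


weights = train_reward_model(comparisons)
print("learned weights:", [round(w, 2) for w in weights])
print("loss before:", round(pairwise_loss([0.0, 0.0, 0.0], comparisons), 3))
print("loss after:", round(pairwise_loss(weights, comparisons), 3))
```

Before training every pair ties, so the loss starts at −log 0.5 ≈ 0.693; afterward each preferred response outscores its rejected partner. In full RLHF, a trained reward model like this supplies the scores that the PPO step then optimizes.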

Why use RLHF?

RLHF lets GPT-4’s developers align the model with human preferences, refining its responses and behavior through repeated rounds of feedback during training. This makes it a powerful way to improve the quality of AI outputs, helping the system better adapt to our needs and preferences.

Remember, though, that while RLHF is a powerful tool, it’s not a silver bullet for all AI issues. It still requires careful management and calibration to ensure the AI model remains effective and doesn’t stray into undesirable outputs. But when used correctly, RLHF is a game-changer for developing more helpful and nuanced AI interactions.
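The Proximal Policy Optimization step of RLHF can also be sketched in a few lines. In the standard PPO formulation, the update maximizes a clipped objective, min(ratio × advantage, clip(ratio, 1 − ε, 1 + ε) × advantage), where ratio compares how likely a response is under the updated model versus the model that generated it; clipping removes the incentive to make any single update large. RLHF setups such as InstructGPT also subtract a penalty, β times the log-ratio between the tuned model and the original reference model, from the reward-model score so the model does not drift too far. The numbers below are hypothetical, and real training applies this per token across large batches, with advantages estimated by a learned value function.

```python
import math


def clipped_objective(logp_new, logp_old, advantage, epsilon=0.2):
    """PPO's clipped surrogate objective for one sample (to be maximized).

    ratio = pi_new(y|x) / pi_old(y|x), computed from log-probabilities.
    Clipping the ratio to [1 - epsilon, 1 + epsilon] removes the incentive
    to move the policy far from the one that generated the sample.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
    return min(ratio * advantage, clipped_ratio * advantage)


def kl_penalized_reward(reward_score, logp_policy, logp_reference, beta=0.1):
    """RLHF-style reward: reward-model score minus a drift penalty.

    The penalty beta * (log pi(y|x) - log pi_ref(y|x)) grows as the tuned model
    assigns the response more probability than the reference model did.
    """
    return reward_score - beta * (logp_policy - logp_reference)


# Hypothetical numbers for a single sampled response.
r = kl_penalized_reward(reward_score=1.2, logp_policy=-4.0, logp_reference=-5.0)
print("penalized reward:", round(r, 3))  # 1.2 - 0.1 * 1.0

# A large policy change (ratio = e^0.5, about 1.65) is capped at 1.2 when the advantage is positive.
print("objective:", round(clipped_objective(logp_new=-3.5, logp_old=-4.0, advantage=1.0), 3))
```

With these numbers the penalized reward is 1.1, and the objective is capped at 1.2 even though the raw ratio is about 1.65, which is how PPO keeps each update small.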

Other Advanced Techniques

Beyond the techniques already discussed, the fast-evolving field of AI keeps introducing other methods to enhance GPT-4’s output. Here, we’ll dip our toes into a few of these additional techniques that are pushing the boundaries of what AI can do.

- Few-Shot Learning: This method relies on the model’s ability to pick up a pattern from a handful of examples, or “shots”, included in the prompt. You could give GPT-4 a few samples of what you want (say, a style of writing), and it will try to match that style in its response, without any retraining. It’s like quickly teaching the AI new tricks with just a few treats.

- Scaling Laws: These describe the relationship between the size of AI models and their performance. Simply put, as models grow larger (in parameters, training data, and compute), their performance generally improves in a predictable way. Understanding this relationship helps researchers plan more powerful models. But remember, it’s not only about size: it’s also how you use it.

- Transfer Learning: Transfer learning allows AI to apply knowledge learned in one context to another, different context. Imagine if an AI learns to identify cats in pictures, and then uses that understanding to identify cats in videos – that’s transfer learning in action.

- Fine-Tuning: This method involves further training an already trained model (like GPT-4) on task-specific data so it performs better on specific tasks. Think of it as tuning a musical instrument so it hits the right notes for a specific composition.

Together, these techniques illustrate how flexible and adaptable current AI systems are. As the technology keeps progressing, it’s fascinating to consider the new methods that could further improve our interactions with AI. We can’t predict exactly what comes next, but the future of AI certainly looks intriguing. Stay tuned!
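Of the techniques listed above, few-shot learning is the one you apply directly in a prompt. The sketch below assembles a few-shot prompt from example input/output pairs, then adds the new input and leaves the final output blank for the model to complete. The helper name, the “Input:”/“Output:” labels, and the sample product descriptions are hypothetical choices; any consistent format should work.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Build a few-shot prompt: an instruction, worked examples, then the new case.

    examples: list of (input_text, output_text) pairs showing the desired style.
    The prompt ends with an open "Output:" line for the model to complete.
    """
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical "shots" demonstrating a playful, one-line product description style.
shots = [
    ("stainless steel water bottle", "Keeps your coffee hot and your excuses cold."),
    ("noise-cancelling headphones", "Your commute, now with a mute button."),
]

prompt = build_few_shot_prompt(
    "Write a playful one-line product description.",
    shots,
    "ergonomic office chair",
)
print(prompt)
```

Keeping every example in the same format makes the pattern easy for the model to spot, and two or three well-chosen shots are often enough to set the style.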

VII. Real-World Prompt Engineering

Prompt engineering isn’t just an intriguing academic pursuit: it has real-world implications for our everyday routines.

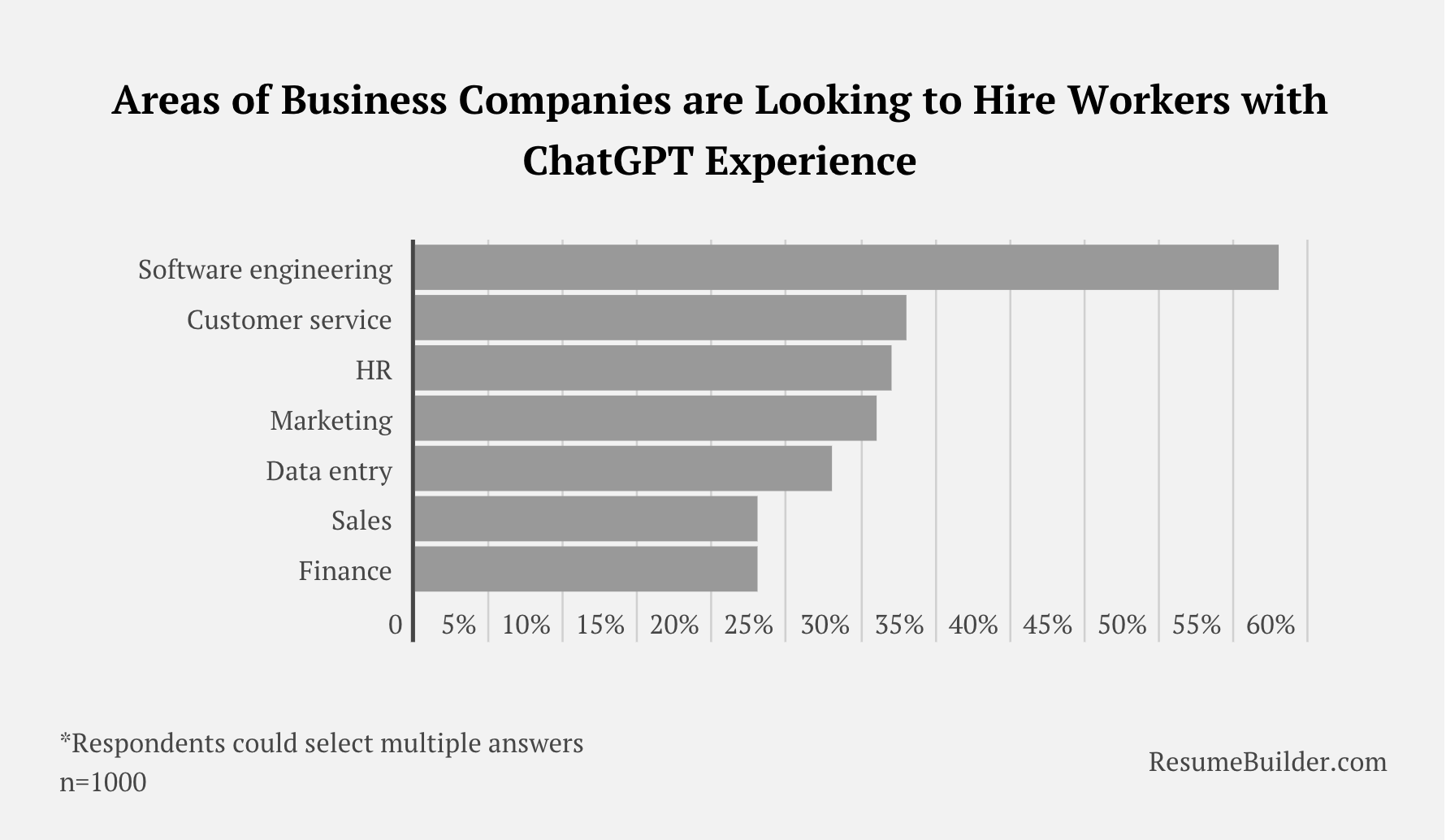

A survey conducted by ResumeBuilder in April 2023 suggests that this intersection between technology and practical application is only becoming more significant. It found that 91% of business leaders who are hiring, across various sectors, prefer candidates with ChatGPT experience, seeing potential for saving resources and increasing productivity.

via ResumeBuilder

But how is this development shaping various industries? Let’s take a closer look and unpack the details.

1. Chatbots

One of the key areas where prompt engineering has made significant strides is chatbots. Able to understand and respond to user prompts, chatbots now handle customer service conversations across many industries.

By carefully crafting prompts, companies can configure chatbots to match their brand voice while ensuring they respond appropriately to customer queries. Whether it’s answering product questions, processing returns, or offering personalized product suggestions, chatbots now offer a more human-like interaction experience, thanks in part to advances in prompt engineering.
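In practice, much of this brand-voice configuration lives in a system prompt that is sent ahead of every customer message. The sketch below builds one from a small brand profile, listing the tasks the bot may handle and a fallback reply for anything else. The `BrandProfile` fields, the company “Acme Outfitters”, and all of the wording are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BrandProfile:
    """Hypothetical settings a company might use to configure a support chatbot."""
    name: str
    voice: str
    supported_tasks: list[str] = field(default_factory=list)
    fallback: str = "I can't help with that here, but I can connect you with our support team."


def build_system_prompt(brand):
    """Turn a brand profile into a system prompt sent before every customer message."""
    tasks = "\n".join(f"- {task}" for task in brand.supported_tasks)
    return (
        f"You are the customer support assistant for {brand.name}.\n"
        f"Write in a {brand.voice} voice.\n"
        f"You can help with:\n{tasks}\n"
        f'If a request falls outside these tasks, reply exactly: "{brand.fallback}"'
    )


acme = BrandProfile(
    name="Acme Outfitters",
    voice="friendly, upbeat, and concise",
    supported_tasks=[
        "answering product questions",
        "processing returns",
        "suggesting products based on the customer's needs",
    ],
)
print(build_system_prompt(acme))
```

Keeping the voice and scope in one structured profile means marketing can adjust the tone without touching the rest of the chatbot.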

2. Content Creation

Prompt engineering has also found its place in content creation. From engaging blog articles to compelling social media posts, AI models are increasingly taking on the first draft.

By controlling the prompts, content creators can dictate not only the content but also the tone, style, and creativity of the AI-generated text. This lets them generate a wide array of content that aligns with their brand identity and audience expectations.

3. Teaching and Training Programs

In the educational sector, prompt engineering has ushered in a new era of personalized learning. With the ability to modify the complexity and length of responses, AI-driven teaching aids can be customized to meet individual learner needs.

Well-designed prompts also let AI deliver creative and interactive learning experiences. And by adjusting the sentiment of its responses, AI can give encouraging, supportive feedback that helps create a positive learning environment.

4. Product Recommendations

In the retail industry, prompt engineering plays a growing role in product recommendation systems. When AI models receive prompts that capture a shopper’s needs and preferences, businesses can deliver more accurate and personalized product suggestions.

Prompt adjustments allow these systems to deliver recommendations that are not just relevant but also engaging, thus driving customer satisfaction and ultimately, sales.

5. Other Emerging Applications

Prompt engineering also holds significant promise in other sectors. Whether it’s healthcare, where AI can provide personalized advice based on individual health prompts, or entertainment, where AI could generate storylines from specific prompts, the potential applications are wide-ranging.

VIII. Other LLMs and Applications

The advent of large language models (LLMs) has marked a significant milestone in artificial intelligence. Just as ChatGPT is powered by OpenAI’s GPT models, many other popular applications are powered by other LLMs or by related generative models. Trained on vast amounts of data, these models can generate human-like text, answer queries, and create original content such as code or, in the case of image models, pictures. They have found applications in a wide range of fields. Let’s take a closer look at some of the most popular ones.

1. Google’s Bard

Google’s Bard, launched in March 2023, is a conversational AI chatbot initially powered by Google’s LaMDA LLM. Developed as a direct response to the rise of OpenAI’s ChatGPT, Bard was first released in the U.S. and the U.K., with a broader rollout following later. Bard is designed to collaborate with users, offering math and reasoning skills, coding capabilities, and the ability to generate creative content.

In updates announced at Google I/O 2023, Bard was enhanced with image capabilities, coding features, and app integration. It became available in over 180 countries with support for multiple languages, and its underlying model was upgraded to PaLM 2, enabling more advanced features. This wide range of capabilities makes Bard a versatile tool for both personal and professional use.

2. Anthropic’s Claude 2

Claude 2, announced by Anthropic in July 2023, built on previous versions with significant jumps in performance, response length, and accessibility. It demonstrates improved conversational abilities, clearer explanations of its reasoning, and fewer harmful outputs. Claude 2 also showed advances in coding, math, and analytical skills, scoring well on exams like the Bar and GRE and performing strongly on Python coding tests and grade-school math problems. Accessible via API and claude.ai, it also shows enhanced comprehension of technical documentation and stronger writing capabilities.

Emphasizing safety, Anthropic reported that Claude 2 was twice as good at giving harmless responses as Claude 1.3 in its internal evaluations. At launch, it was already used by companies such as Jasper and Sourcegraph. Its semantic, reasoning, and contextual advancements make Claude 2 a valuable tool for individual and business use cases.

Please see this guide for how ChatGPT, Bard and Claude 2 compare.

3. OpenAI’s DALL-E

OpenAI’s DALL-E is a deep learning model designed to generate digital images from natural language descriptions. Launched in January 2021, DALL-E uses a version of GPT-3 modified to generate images. It can generate a wide variety of images based on the prompts it is given, demonstrating the ability to manipulate and rearrange objects in its images, fill in the blanks to infer appropriate details without specific prompts, and even produce images from various viewpoints with only rare failures.

In April 2022, OpenAI announced DALL-E 2, a successor designed to generate more realistic images at higher resolutions. DALL-E 2 can also produce “variations” of an existing image and can modify or expand upon the original.

4. Midjourney

Midjourney is an AI image generation service created by the San Francisco-based lab Midjourney, Inc. It produces images from natural language prompts, similar to DALL-E and Stable Diffusion, with the stated aim of expanding imagination and exploring new mediums of thought. Its v5.2 update brought enhanced image quality and a new high variation mode for increased compositional variety, though features may change without notice as development continues.

Midjourney is accessible through a Discord bot, and users can generate images using the /imagine command. The tool is also used for rapid prototyping of artistic concepts, and it has sparked some controversy among artists who feel their original work is being devalued. Despite facing litigation over copyright infringement claims, Midjourney continues to evolve, transitioning to an AI-powered content moderation system and offering a paid subscription service.

5. Stable Diffusion by Stability AI

Stable Diffusion is a deep learning image generation model released in 2022 by Stability AI. As a latent diffusion model, it can create detailed images from text prompts and also handles tasks like inpainting, outpainting, and image-to-image translation. Unlike earlier proprietary text-to-image models, Stable Diffusion’s code and weights are public, making it widely accessible.

The model was trained on pairs of images and captions from the LAION-5B dataset. Its limitations include difficulty rendering human limbs, attributed to the quality of its training data, and the need for fine-tuning for new use cases. Despite controversy over ownership ethics and potential bias, its creators believe the technology will provide a net benefit.

6. Perplexity AI

Perplexity AI is an AI-powered search engine that provides direct, up-to-date answers by searching the web in real time. A key feature is Copilot, an interactive AI companion powered by GPT-4 that refines searches with clarifying questions and summarizes the results. Other features include context-aware responses, cited sources, and a conversational interface. In our review, Perplexity AI stood out as a dynamic search tool, quickly and easily surfacing reliable information.

The platform is accessible via the web and offers free and premium versions. Users can sign up for premium features and interact with the AI through an intuitive chat interface. While its conversational abilities may lag behind other chatbots, unique features like Copilot and cited sources make it a powerful solution for quick access to credible information.

7. GitHub Copilot

GitHub Copilot is an AI tool developed by GitHub and OpenAI, designed to assist users by autocompleting code in various IDEs. It was first announced in June 2021 and is currently available by subscription. The tool is powered by OpenAI Codex, a descendant of OpenAI’s GPT-3 model, fine-tuned for programming applications.

As OpenAI released stronger models, GitHub Copilot improved and gained new capabilities. In March 2023, GitHub announced GitHub Copilot X, its vision for an AI-powered developer experience that extends beyond the IDE. Despite concerns about its security, educational impact, and potential copyright violations, GitHub Copilot continues to evolve, aiming to enhance the developer experience.

IX. Conclusion

Let’s bring it all home: prompt engineering is basically our magic wand to make AI even cooler and more useful. With AI tech moving at warp speed, prompt engineering is set to be an even bigger player in the game. It’s all about making our AI chats feel personalized, spot-on, and able to roll with the punches, something we’re all surely pumped about.

In summary, prompt engineering is a key area to watch in the fast-paced world of AI. Whether you’re a tech enthusiast or just interested in future trends, it’s worth keeping track of as we delve deeper into our AI-driven lives.

Ada Rivers

Ada Rivers is a senior writer and marketer with a Master’s in Global Marketing. She enjoys helping businesses reach their audience. In her free time, she likes hiking, cooking, and practicing yoga.
