Promptfoo

AI tool that enables efficient evaluation and testing of LLM prompts

Streamlines LLM prompt evaluation and tuning

Facilitates objective comparisons of model outputs

Integrates seamlessly into development workflows

Categories: #Development & Code

What is Promptfoo

Promptfoo is a library for evaluating and testing the quality of Large Language Model (LLM) prompts. It provides tools for creating test datasets, setting up evaluation metrics, and selecting the best prompts and models. Aimed at developers, it helps measure LLM quality improvements and catch regressions, and it offers both a web viewer and a command-line interface for integration into existing workflows. Promptfoo is used by LLM applications serving millions of users to streamline prompt evaluation and model selection.

Key Features

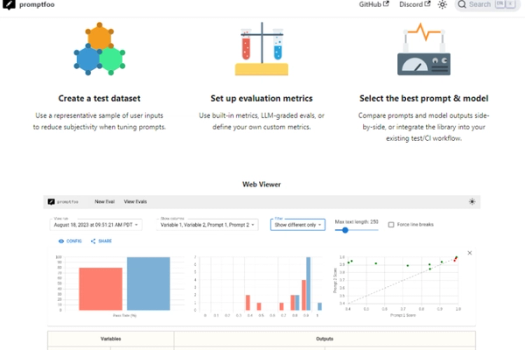

- Test Dataset Creation: Allows users to use a sample of user inputs for objective prompt tuning.

- Evaluation Metrics Setup: Offers built-in metrics and custom metric options for evaluating prompts.

- Prompt & Model Selection: Enables side-by-side comparison of prompts and model outputs for optimal selection.

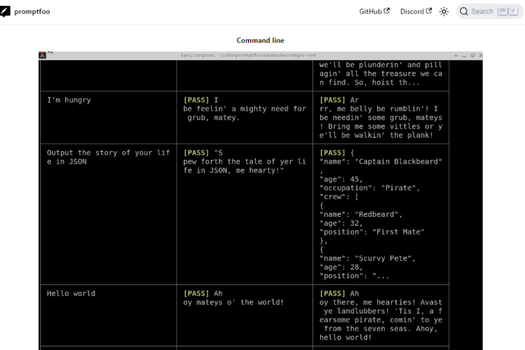

- Web Viewer & Command Line Interface: Provides tools for easy integration into test/CI workflows.
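The workflow behind these features can be sketched in a few lines of plain Python. This is only a conceptual illustration, not Promptfoo's own API or configuration format: `fake_model`, `PROMPTS`, `TEST_CASES`, `contains_metric`, and `evaluate` are hypothetical names, and the "model" is a deterministic stub standing in for a real LLM call. It shows a small test dataset, one simple metric, and a per-prompt pass rate used to compare prompts.

```python
from typing import Callable, Dict, List


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call (assumption, not a real API).

    Upper-cases the prompt when it starts with "Shout:", otherwise echoes it.
    """
    return prompt.upper() if prompt.startswith("Shout:") else prompt


# Candidate prompt templates to compare (illustrative only).
PROMPTS: Dict[str, str] = {
    "plain": "Say: {text}",
    "shout": "Shout: {text}",
}

# Test dataset: sample inputs plus the substring each output should contain.
TEST_CASES: List[Dict[str, str]] = [
    {"text": "hello", "expect": "HELLO"},
    {"text": "world", "expect": "WORLD"},
]


def contains_metric(output: str, expected: str) -> bool:
    """A simple pass/fail metric: does the output contain the expected text?"""
    return expected in output


def evaluate(
    prompts: Dict[str, str],
    cases: List[Dict[str, str]],
    model: Callable[[str], str],
    metric: Callable[[str, str], bool],
) -> Dict[str, float]:
    """Run every prompt against every test case and return pass rates."""
    scores: Dict[str, float] = {}
    for name, template in prompts.items():
        passed = sum(
            metric(model(template.format(text=case["text"])), case["expect"])
            for case in cases
        )
        scores[name] = passed / len(cases)
    return scores


scores = evaluate(PROMPTS, TEST_CASES, fake_model, contains_metric)
best = max(scores, key=scores.get)  # prompt with the highest pass rate
print(scores, best)
```

Re-running the same test cases after a prompt or model change and comparing the pass rates is, in spirit, how a regression would be caught; a real setup would replace the stub with actual model calls and richer metrics.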
