Parea AI

An experiment tracking and human annotation platform for LLM applications

Experiment tracking for LLM app development

Human feedback for model fine-tuning

Integrates with major LLM providers and frameworks

Category: Development & Code

What is Parea AI

Parea AI is a platform that helps teams build production-ready LLM applications through experiment tracking and human annotation. It provides tools for testing, tracking performance, collecting human feedback, and deploying prompts, and it integrates with major LLM providers and frameworks. Pricing ranges from a free Builder plan for small teams to custom-priced Enterprise plans.

Key Features of Parea AI

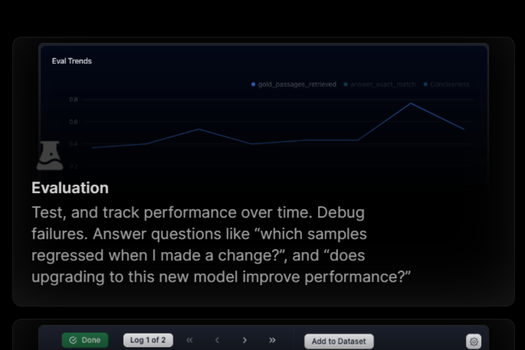

- Experiment Tracking: Run tests, track performance over time, and debug failures.

- Human Annotation: Collect and integrate human feedback from end users, experts, and product teams for quality assurance (QA) and fine-tuning.

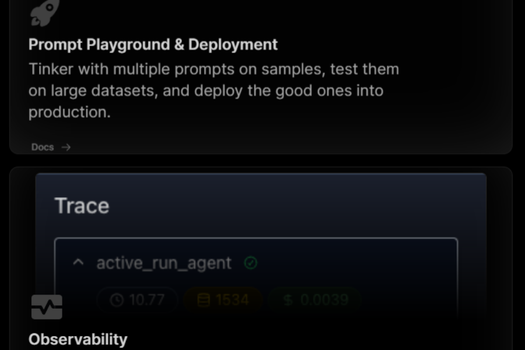

- Prompt Playground & Deployment: Compare multiple prompt variants, test them on large datasets, and deploy the best-performing ones to production.

- Observability: Log data from staging and production, debug issues, run online evaluations, and capture user feedback in one place.

- Dataset Management: Incorporate logs from staging and production into test datasets for model fine-tuning.

- Python & JavaScript SDKs: Lightweight SDKs that automatically trace LLM calls and run tests on datasets.

- Native Integrations: Integrates with major LLM providers and frameworks, including OpenAI, Anthropic, and LangChain.

- Enterprise-Level Features: Includes on-prem/self-hosting, support SLAs, unlimited logs, SSO enforcement, custom roles, and enhanced security and compliance features.

Pricing

Builder Plan:

- Cost: Free

- Up to 2 team members

- 3k logs per month

- 25 deployed prompts

- Access to all platform features

- Discord community for support

- No credit card required to start

Team Plan:

- Cost: $150/month (includes 3 members; additional members $50/month each, up to 20 members)

- 100k logs per month ($0.001 per extra log)

- Unlimited projects

- Unlimited deployed prompts

- Private Slack channel for support
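The Team plan's monthly bill combines a flat base fee with per-member and per-log overages. The sketch below estimates that total, assuming the list prices above apply exactly as stated (no proration, discounts, or taxes). The function name and constants are hypothetical and illustrate the arithmetic only. This is not Parea's own billing logic.

```python
# Hypothetical estimator: assumes Team plan billing follows the list prices
# exactly (no proration, discounts, or taxes). Not Parea's billing code.

TEAM_BASE_FEE = 150.00         # USD/month, covers the first 3 members
TEAM_INCLUDED_MEMBERS = 3
TEAM_EXTRA_MEMBER_FEE = 50.00  # USD/month per member beyond the first 3
TEAM_MAX_MEMBERS = 20
TEAM_INCLUDED_LOGS = 100_000   # logs per month included in the base fee
TEAM_PER_EXTRA_LOG = 0.001     # USD per log beyond the included quota


def team_plan_monthly_cost(members: int, logs: int) -> float:
    """Estimate a Team plan monthly bill in USD from seat count and log volume."""
    if not 1 <= members <= TEAM_MAX_MEMBERS:
        raise ValueError(f"Team plan supports 1 to {TEAM_MAX_MEMBERS} members")
    if logs < 0:
        raise ValueError("logs must be non-negative")

    extra_members = max(0, members - TEAM_INCLUDED_MEMBERS)
    extra_logs = max(0, logs - TEAM_INCLUDED_LOGS)
    total = (
        TEAM_BASE_FEE
        + extra_members * TEAM_EXTRA_MEMBER_FEE
        + extra_logs * TEAM_PER_EXTRA_LOG
    )
    # Round to cents to hide floating-point noise from the per-log rate.
    return round(total, 2)


if __name__ == "__main__":
    print(team_plan_monthly_cost(5, 150_000))
```

For example, 5 members and 150,000 logs come to $150 + 2 × $50 + 50,000 × $0.001 = $300.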

Enterprise Plan:

- Custom pricing

- On-prem/self-hosting options

- Support Service Level Agreements (SLAs)

- Unlimited logs

- Single Sign-On (SSO) enforcement and custom roles

- Additional security and compliance features

- Direct consultation with founders
