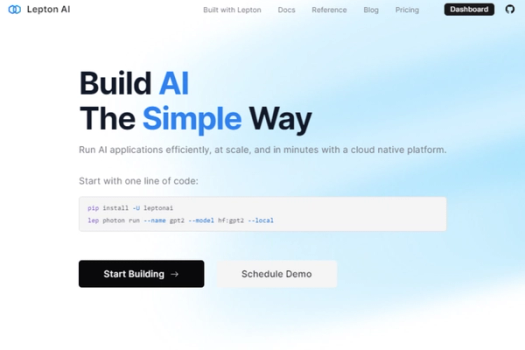

Lepton

Run scalable AI applications with Lepton's simple cloud-native platform

Cloud-native AI platform

Efficiently run AI at scale

Simple commands for quick model building


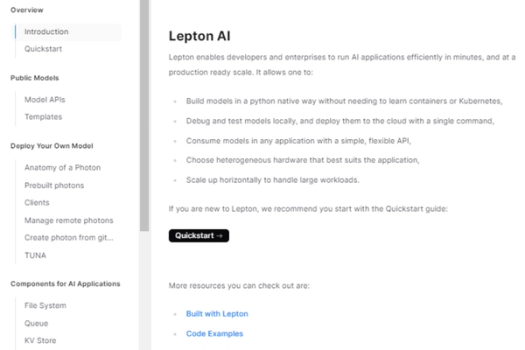

What is Lepton

Lepton AI offers a cloud-native platform for running AI applications efficiently and at scale. Developers can start building with a few simple commands, which makes it straightforward to integrate AI into existing projects. The platform supports a range of models, including models for speech recognition and language processing.

Key Features of Lepton

- Cloud-Native Platform: Run AI applications efficiently and at scale in minutes.

- Easy Initialization: Install the SDK with a single command: `pip install -U leptonai`.

- Flexible Model Execution: Quickly run popular language models using straightforward commands like `lep photon run --name gpt2 --model hf:gpt2 --local`.

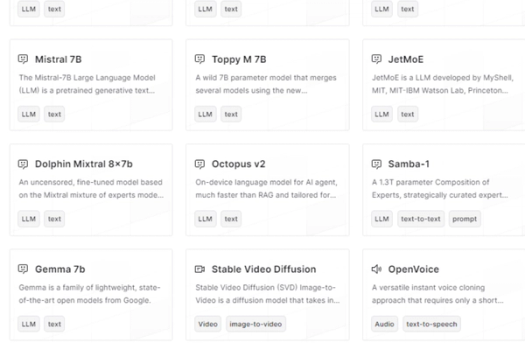

- Speech Recognition: Use WhisperX for robust speech recognition, built on Whisper models trained with large-scale weak supervision.

- Language Model Support: Seamlessly run various advanced language models such as Mixtral 8x7b and Llama2 13b.

- Interactive Interface: Record and interact using the platform's integrated tools.

- Open Source Accessibility: Run open-source models such as Stable Diffusion XL for creative image-generation projects.

- Support and Consultation: Options to start building immediately or schedule a demo for more in-depth support.

Pricing

Basic Plan:

- Cost: $0/month

- Features: No subscription fee, up to 48 CPUs and 2 GPUs concurrently.

- Ideal for: Individuals and small teams to get started.

Standard Plan:

- Cost: $30/month

- Features: Multi-user support for collaboration, custom runtime environments, dedicated account manager, up to 192 CPUs and 8 GPUs concurrently, model APIs without rate limits.

- Ideal for: Collaborative teams and growing businesses.

Enterprise Plan:

- Cost: Custom pricing

- Features: All features of the Standard plan plus custom integration and support, self-hosted deployments, dedicated API support for the control plane, audit log and RBAC (coming soon), and prioritized roadmap requests.

- Ideal for: Organizations that need strict SLAs, high performance, and compliance.

Compute Costs:

- CPU Options:

  - cpu.small (1 CPU, 4 GB) - $0.0495/hour

  - cpu.medium (2 CPUs, 8 GB) - $0.099/hour

  - cpu.large (4 CPUs, 16 GB) - $0.198/hour
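
To make the hourly CPU rates easier to compare, here is a minimal Python sketch that estimates compute cost from the prices listed above. It assumes billing is strictly linear in hours and instance count, with no minimum charge or free allowance, which the listing does not confirm. The function name `estimate_cost` and the `replicas` parameter are hypothetical and not part of any Lepton API.

```python
# Hourly CPU rates copied from the listing above (USD per hour).
CPU_HOURLY_RATES = {
    "cpu.small": 0.0495,   # 1 CPU, 4 GB
    "cpu.medium": 0.099,   # 2 CPUs, 8 GB
    "cpu.large": 0.198,    # 4 CPUs, 16 GB
}


def estimate_cost(shape: str, hours: float, replicas: int = 1) -> float:
    """Estimate compute cost in USD, rounded to 4 decimal places.

    Assumption: cost = hourly rate * hours * replicas, with no minimums
    or discounts. Actual billing rules may differ.
    """
    if shape not in CPU_HOURLY_RATES:
        raise KeyError(f"unknown resource shape: {shape}")
    if hours < 0 or replicas < 1:
        raise ValueError("hours must be >= 0 and replicas must be >= 1")
    return round(CPU_HOURLY_RATES[shape] * hours * replicas, 4)


if __name__ == "__main__":
    # One cpu.medium instance running for a 30-day month (720 hours).
    print(estimate_cost("cpu.medium", 24 * 30))
```

Under these assumptions, a single always-on cpu.medium instance costs more per month than the Standard plan subscription itself, so compute usage is likely to dominate the total bill for long-running workloads.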
